Some thoughts on the future of marine towed streamer seismic acquisition for offshore oil and gas exploration.

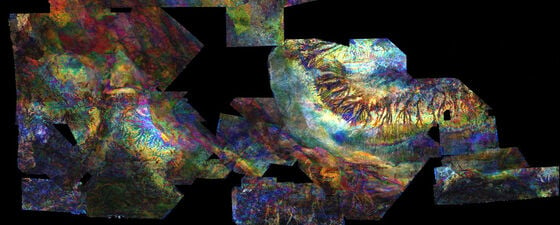

Polarcus XArray™ Triple source acquisition. © Polarcus.

Seismic acquisition and exploration technology have been in an arms race since day one. Equipment manufacturers have taken us from low channel analog recording systems to high-channel count digital systems with low-noise streamers. Processing houses have taken us from NMO stack to DMO and post-stack migration to pre-stack time migration and depth migrations and now full waveform inversion (FWI). In each case, one can argue that each step forward is countered by an alternative competing solution – for example, low-noise solid streamer countered by high-end de-noise workflows and similarly for broadband solutions. For processing and imaging, the driver behind new and competing algorithms tends to be access to cheaper and faster compute capacity. In each case, any advance, and hence commercial advantage, is quickly commoditized by an alternative, through either acquisition or processing or a combination of both. A true step change in seismic quality is difficult to see right now; high-end acquisition systems may well produce demonstrably better results, but not always where they are useful to the geologist − and they carry significant R&D costs.

So how are we going to progress given truly novel and useful innovation is a way off? In part, it will be the application of known science in new ways or just different ways of looking at the problem; knowing the problem and providing the simplest practical solution.

For example, as part of their drive on costs during the downturn, exploration companies have shortened the exploration cycle time – and here the ‘cloud’ offers solutions. For environmental factors, the humble near-field hydrophone gives new options if we understand how the seismic source works. For quality, 4D repeats can be improved in simple ways that decrease the risk. All in all, the future is about flexibility and adaptability to help de-risk the project, be it a large exploration survey or the smallest 4D repeat.

We discuss some interesting, and maybe unexpected, solutions in this article.

Seismic Data in the Cloud

Seismic operations room onboard a Polarcus vessel. © Polarcus.

By getting seismic data in the cloud – i.e. uploaded to a data center somewhere – we decouple where the data is generated from where it is used. Simple, but the implications are wide ranging.

The simple aspect is that by uploading the data, we eliminate the time, and the possible delays, involved in getting physical tapes from the vessel to the processing house, and we provide access to anyone, anywhere.

In addition to the acquisition-QC displays already sent off the vessel, and to additional data such as streamer separations, gun timings, currents and bathymetry, cloud seismic gives access to the actual data for more detailed analysis. This means that onboard representative staff can be reduced and theoretically eliminated (OK, that is pie in the sky!), offering a reduction in both HSE risk and costs whilst increasing the ability of onshore experts to evaluate and monitor quality from anywhere.

Fast-track Processing Seismic Data: Frankenstein’s Monster

Maybe a harsh comparison to make but, like the original Frankenstein’s monster, fast-track imaging can sometimes be terrible and sometimes a life-saver; for example, initial amplitude scans and initial well-prognosis can both be concerns.

Fast-tracking suffers from three issues:

experience in the field;

supervision in the field;

and compute resources.

Acquisition project planning onboard. © Polarcus.

So, if we can upload seismic data from the vessel directly to the cloud it allows access to unrestricted compute resources: more time for testing as processing stages can be run much faster than onboard, enabling higher-end flows such as depth migrations and FWI. The processing center can be anywhere − a big screen and an internet connection are all that is needed − so oversight is easier to manage. Even without local processing, just being able to interactively examine the tests properly will help, including bringing in additional experts regardless of their location. What we will end up with then is super-fast slow-tracks where the interpreters can get going in just a few weeks after the last shot, reducing the cycle time.

A real advantage of seismic in the cloud is the ability to use the final processing center to QC the acquisition; whilst onboard data QC is sophisticated, it will not match the baseline processing in the onshore processing center. The processing contractor can then sign off on the acquisition, which will reduce the risk. Of course, having the data available and a known processing flow means having 4D results in no time − with a caveat.

Uploading to the cloud off the vessel has been accomplished, and the satellite links are stable enough. However, the satellite network is still limited by the rate of data transmission. To get around this, we must compress the seismic data. In the past, compression has resulted in information being lost, but technology is now available that allows virtually lossless compression. How do we define lossless? When we difference compressed and uncompressed data, we can see that the ‘loss’ is 60 dB down. More importantly, the spectrum of the difference is perfectly flat, indicating white noise containing no information or signal. For 4D the differences may be borderline, but compression levels can be varied to suit, eliminating the risk.
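The two checks described above, the level of the difference and the flatness of its spectrum, can be sketched in a few lines. This is only an illustration under stated assumptions: the function names `residual_level_db` and `spectral_flatness` are hypothetical, the data are a synthetic stand-in (a 30 Hz sinusoid with white noise added at -60 dB to mimic a compression round trip), and flatness is measured here as the ratio of the geometric to the arithmetic mean of the trace-averaged power spectrum, which is one common choice rather than the measure used by any particular compression product.

```python
import numpy as np

def residual_level_db(original, decompressed):
    """RMS level of (original - decompressed) relative to the original, in dB."""
    original = np.asarray(original, dtype=float)
    residual = original - np.asarray(decompressed, dtype=float)
    rms_residual = np.sqrt(np.mean(residual ** 2))
    rms_original = np.sqrt(np.mean(original ** 2))
    return 20.0 * np.log10(rms_residual / rms_original)

def spectral_flatness(gather):
    """Flatness of the trace-averaged power spectrum of an (n_traces, n_samples) array.

    Values close to 1 suggest a white (flat) spectrum; values close to 0
    suggest coherent, band-limited energy, i.e. possible signal.
    """
    power = np.mean(np.abs(np.fft.rfft(gather, axis=-1)) ** 2, axis=0)
    power = power[1:]            # ignore the DC term
    power = power[power > 0.0]   # guard the logarithm
    return float(np.exp(np.mean(np.log(power))) / np.mean(power))

# Synthetic stand-in for a gather: 64 traces, 1000 samples at 4 ms (assumed values).
rng = np.random.default_rng(0)
t = np.arange(1000) * 0.004
original = np.tile(np.sin(2.0 * np.pi * 30.0 * t), (64, 1))

# Mimic a compression round trip by adding white noise 60 dB below the signal.
noise_rms = 1e-3 * np.sqrt(np.mean(original ** 2))
decompressed = original + rng.normal(scale=noise_rms, size=original.shape)

level_db = residual_level_db(original, decompressed)
flatness_residual = spectral_flatness(original - decompressed)
flatness_signal = spectral_flatness(original)
print(f"residual level: {level_db:.1f} dB")
print(f"flatness of residual: {flatness_residual:.2f}, of signal: {flatness_signal:.2g}")
```

Averaging the power spectrum over many traces before measuring flatness matters: the raw spectrum of a single white-noise trace is itself very ragged, so a single-trace flatness value would sit well below 1 even when no signal has been lost.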

Big is Beautiful?

Live quality control of ongoing acquisition. © Polarcus.

Another trend that is building within the industry is for more, but smaller, sources. Triple source acquisition has become ubiquitous since the reboot by Polarcus a few years ago. Implicit in this is the reduction in source volumes, as seismic vessels generally have six sub-arrays which can be used − and with the triple source, we go from three sub-arrays per source to two. Given the amount of data acquired with this design, there do not appear to be any concerns with the smaller output.

This can be explained by the decreased ambient noise recorded with solid streamers and better algorithms for removing the remaining noise, coupled with high-dynamic range recording systems. If we go for still smaller sources, we will start to lose the airgun tuning that drove up source sizes in the first place: the reduction of the ‘bubble’ rather than the increase in overall acoustic energy. The solution is to use the near-field hydrophone (NFH) to create shot-by-shot estimates of the source signature, which allows for accurate de-bubble in particular. With this, metrics such as the peak-to-bubble ratio become obsolete. Concerns over ambient noise will always be there but can be mitigated by increasing the shot sampling to more than compensate. In many cases, the ‘noise’ is, in fact, shot generated and therefore scales with the source output, as in the example of multiples. Tests have already been run with single cluster sources with some success, showing that smaller sources can be viable.
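To make the de-bubble idea concrete, here is a minimal sketch of what can be done once a per-shot signature is in hand. It assumes the signature has already been estimated from the NFH data, which is the hard part and is not shown. The function name `designature`, the `water_level` parameter and the toy signature (a unit primary pulse followed by a half-amplitude ‘bubble’ 40 samples later) are all illustrative assumptions. The technique is a stabilised spectral division, one simple way to remove a known signature, not necessarily what any processing contractor runs.

```python
import numpy as np

def designature(trace, signature, water_level=1e-3):
    """Remove a known source signature from a trace by stabilised spectral division.

    water_level is a fraction of the peak signature power added to the
    denominator so that weak frequencies are not blown up.
    """
    n = len(trace) + len(signature) - 1
    nfft = 1 << (n - 1).bit_length()          # next power of two, avoids wrap-around
    trace_f = np.fft.rfft(trace, nfft)
    sig_f = np.fft.rfft(signature, nfft)
    power = np.abs(sig_f) ** 2
    inverse = np.conj(sig_f) / (power + water_level * power.max())
    return np.fft.irfft(trace_f * inverse, nfft)[: len(trace)]

# Toy per-shot signature: primary pulse plus a bubble pulse (assumed shape).
signature = np.zeros(64)
signature[0] = 1.0
signature[40] = 0.5

# Toy reflectivity with two reflectors, and the trace recorded with that signature.
reflectivity = np.zeros(512)
reflectivity[100] = 1.0
reflectivity[300] = -0.7
trace = np.convolve(reflectivity, signature)[: len(reflectivity)]

cleaned = designature(trace, signature)
print("bubble before:", trace[140], "after:", round(float(cleaned[140]), 4))
```

Because the filter is built from the signature of each individual shot, the source array no longer has to be tuned to suppress the bubble, which is the sense in which a measure like the peak-to-bubble ratio loses its importance.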

Smaller sources would reduce any adverse environmental effects, at least those due to the peak sound pressure level (SPL). But if we fire the airguns more frequently, which we would then need to do, the downside is little, if any, reduction in the sound exposure level (SEL), which reflects the sound energy accumulated over time. For comparison, a motorcycle that backfires has a high peak sound level, while the background traffic noise is like the sound exposure level. However, as with safety, reducing outputs to a minimum should be an essential ambition, and one that will be forced on the seismic industry at some point anyway.
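The trade-off between peak level and sound exposure level can be put into numbers. The sketch below uses the standard relation that the cumulative exposure level of N identical shots is the single-shot level plus 10·log10(N). The figures (a 200 dB single-shot level, 100 versus 200 shots) are made up purely for illustration, and the 3 dB drop in peak level assumes the smaller source has the same pulse shape with half the energy, which real airgun arrays will only approximate.

```python
import math

def cumulative_sel_db(single_shot_sel_db, n_shots):
    """Cumulative sound exposure level of n_shots identical shots, in dB."""
    return single_shot_sel_db + 10.0 * math.log10(n_shots)

# Hypothetical baseline: full-size source, 100 shots over some stretch of line.
baseline_single_db = 200.0
baseline_total_db = cumulative_sel_db(baseline_single_db, 100)

# Hypothetical smaller source: half the energy per shot, fired twice as often.
energy_change_db = 10.0 * math.log10(0.5)           # about -3 dB per shot
small_single_db = baseline_single_db + energy_change_db
small_total_db = cumulative_sel_db(small_single_db, 200)

# Peak level does not accumulate; for an unchanged pulse shape it falls by
# the same amount as the per-shot energy.
peak_change_db = 20.0 * math.log10(math.sqrt(0.5))

print(f"cumulative level, baseline: {baseline_total_db:.1f} dB")
print(f"cumulative level, smaller source: {small_total_db:.1f} dB")
print(f"change in peak level: {peak_change_db:.1f} dB")
```

In this toy case the peak level falls by about 3 dB while the cumulative exposure is unchanged, which is the point made above: firing more often gives back in exposure what the smaller source saved.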

We can use more sources to help our acquisition designs and increase quality. By using wide streamer spreads we gain efficiency but can create problems in the demultiple process with the lack of near offsets. The simple solution is to spread out the sources – wide-tow sources – to create a mix of near-trace offsets so that demultiple processes like SRME can work better and regularization can fill any holes to improve the near-surface imaging. This is an example of the ‘arms race’, where different software and hardware solutions have been developed in the past to deal with the poor near-offsets of wide spreads. Wide-tow sources offer an alternative, which, like many good solutions, is the simplest – and also removes proprietary acquisition and processing not so beloved by operators, especially their procurement departments!

4D monitor surveys can also be helped by smaller but measured sources. We can create source geometries that simultaneously replicate the baseline 4D geometry whilst also increasing the resolution and fold. With NFH data we can compensate for the different source signatures. We can even verify this through use of the cloud: upload data, run de-noise and de-signature, then compare. This would test not only that source characteristics match but also the compression, source-receiver position-matching and any other effects, known or unknown, that might affect the 4D response.

Hybrid Seismic Acquisition Surveys

Back-deck streamer deployment. © Polarcus.

There are advantages to combining streamers and nodes: we take the benefit of the near-offset density of streamers so that we can increase the node spacing. Challenges will be there for the combination of such different datasets, but maybe that is not so important. Streamers will give access to data via the cloud very quickly and enable the velocity modeling to begin sooner, whether it be manual, tomography or FWI. Once node data is retrieved, shipped and loaded, it can be used to complete the velocity model building with long offsets.

If the node separation is small enough, then we can create an image dataset for the deeper section. Given that nodes can provide deep velocity control, streamer lengths can also be reduced, depending on the node geometry and project objectives. Ideally, streamer and node data would be combined, but that will depend on many factors, driven one way or the other by the geology.

A Possible Future?

The cloud clearly offers some great options for the future, especially in the 4D world where even the smallest deviations could ruin the result. The concept of uploading data comes with a risk of what might be lost during compression – but this can be checked and quantified as required, and even if something slips through, the affected shots can be simply replaced at the processing stage. Or if the reservoir-level interpretation (inversion) is particularly challenging, rerunning lines over the target area will just be a CPU burn and will remove any doubt. What fits in well with data compression is the multi-source style of acquisition, where more sources do not increase the data volumes for compression, as the shots overlap, but more streamers do. Increased source numbers, and hence smaller source volumes, are enabled by accurate de-signature through NFH data.

So, the future will be driven by the humble near-field hydrophone. Who would have thought of that?