Artificial Intelligence (AI) has at least one feature in common with hydrocarbon exploration: a history of boom and bust. Other industries might complain about the unpredictability of demand for their products but generally do not have to contend with a market in which the price of their product fluctuates as wildly as that of crude oil. Oil exploration and production is notoriously boom and bust, for reasons that are too complex for most economists to understand. AI’s boom and bust is probably much easier to explain: excessive optimism.

Some Important History

AI is reckoned to have become a separate field of study in 1956, when leading US researchers met at a summer school and gave the field its current name. As well as christening this new science, they made some predictions, broadly claiming that machines would be as intelligent as humans within a generation. This led to a huge influx of research funding, notably from the Defense Advanced Research Projects Agency (DARPA), an agency of the US military, and then to an equally dramatic loss of funding a few years later when the predicted benefits failed to materialise. This withdrawal was later called the first AI winter, but only after a second wave of optimism in the late 20th century had led to history repeating itself.

We are now experiencing spring once again, with green shoots of AI springing up all over. This year’s BBC Reith Lectures were on AI, specifically the dangers we will face when the machines eventually become more intelligent than us. So maybe we have learnt something: if not to dial back on the optimism, then at least to look before we leap.

Is it really different this ‘year’? Will our winter of discontent finally be made glorious summer? In considering this question, past performance may not be the best guide to the future, but it’s not a bad starting point. So, some historical background should be useful; and understanding how AI works, at least at the heuristic level, should help us see where, in our industry and areas of specialism, we might expect to see the most change in the near future.

Benefits: The Internet and Search Engines

The first historical point to note is that AI has not actually been the failure implied by the periodic dramatic retreats made by the funders, although the successes have sometimes been of a different kind. DARPA’s funding of purely conceptual research, with no strings attached, gave us the internet; the internet gained widespread acceptance through search engines; and the main search engine gained its dominance through its use of AI. Or, on a less pragmatic level, it is said that research into AI has taught us as much about human intelligence, and the nature of intelligence in general, as it has about machine intelligence. A common summary of the state of AI at the end of the last century was that we had taught computers to beat the world chess champion but not to perform tasks mastered by three-year-olds, such as understanding natural language. At the outset, playing (and winning at) chess was seen as the pinnacle of achievement.

This is formalised in Moravec’s paradox, which notes that proving theorems and solving geometry problems (deductive logic) are comparatively easy for computers, whereas supposedly simple tasks like recognising a face or crossing a room without bumping into anything (inductive logic) are extremely difficult. Natural language processing is another stumbling block for AI. The following pair of sentences illustrates some of the potential difficulties:

‘Time flies like an arrow.’

‘Fruit flies like a banana.’

How could a computer be taught to distinguish the different uses of ‘flies’ and of ‘like’? Simple rule-based systems find this (almost) impossible. But with access to the entire corpus of literature in the English language for comparative testing, AI can use ‘the wisdom of the crowds’ to infer intention from context, and hence deduce, at least probabilistically, the intended meaning.
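The ‘wisdom of the crowds’ approach can be sketched as simple counting: in a tagged corpus, how often is ‘flies’ a verb after ‘time’, and how often a noun after ‘fruit’? The sketch below assumes a tiny, invented hand-tagged corpus (`TAGGED_CORPUS`) and counts tags for each (previous word, word) pair, falling back to counts for the word alone; real systems use vastly larger corpora and much richer statistical models.

```python
from collections import Counter, defaultdict

# Hypothetical hand-tagged mini-corpus, invented for illustration only.
TAGGED_CORPUS = [
    [("time", "NOUN"), ("flies", "VERB"), ("quickly", "ADV")],
    [("the", "DET"), ("bird", "NOUN"), ("flies", "VERB"), ("south", "ADV")],
    [("time", "NOUN"), ("flies", "VERB"), ("when", "ADV"), ("busy", "ADJ")],
    [("fruit", "NOUN"), ("flies", "NOUN"), ("swarm", "VERB")],
    [("fruit", "NOUN"), ("flies", "NOUN"), ("breed", "VERB"), ("fast", "ADV")],
    [("house", "NOUN"), ("flies", "NOUN"), ("buzz", "VERB")],
]

def count_tags(corpus):
    """Tally tags by (previous word, word) context and by word alone."""
    by_context = defaultdict(Counter)
    by_word = defaultdict(Counter)
    for sentence in corpus:
        prev = "<s>"  # sentence-start marker
        for word, tag in sentence:
            by_context[(prev, word)][tag] += 1
            by_word[word][tag] += 1
            prev = word
    return by_context, by_word

def most_likely_tag(prev, word, by_context, by_word):
    """Return (tag, estimated probability); use the context if it was seen."""
    counts = by_context.get((prev, word)) or by_word.get(word)
    if not counts:
        return None, 0.0
    tag, n = counts.most_common(1)[0]
    return tag, n / sum(counts.values())

by_context, by_word = count_tags(TAGGED_CORPUS)
for prev in ("time", "fruit"):
    print(prev, "flies ->", most_likely_tag(prev, "flies", by_context, by_word))
```

The context (‘time’ versus ‘fruit’) decides the reading; with a realistic corpus, the same counts would become probabilities rather than certainties.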

AI has achieved its major successes by taking advantage of breakthroughs unavailable to those researchers attending that summer school in 1956:

- access to data: the internet makes available vast quantities of data (although not all would agree on describing it as ‘information’);

- computing power: Deep Blue, the IBM computer that defeated then-reigning world chess champion Garry Kasparov in 1997, was at least 10 million times faster than the fastest 1956 computer;

- evolution and development of the mathematical basis for AI, including new tools and borrowing tools from other disciplines, notably mathematical statistics;

- commercial drive, e.g., from makers of phones (wanting to improve typing speeds by utilising predictive texting or auto-correct) and voice-controlled devices, creating measurable goals and provable outcomes which result in huge profit if successful.

Expert Systems to Machine Learning

Expert systems had a rule base, or knowledge base: rules such as ‘If the temperature drops below 0 °C, then water is likely to freeze’. Add rules for the dependence on pressure and on the presence of impurities in the water, together with temperature and pressure sensors and possibly even impurity composition detectors (or at least inputs of concentrations), and an expert system could easily handle tasks previously allocated to humans, with the benefits of greater efficiency, fewer mistakes and faster decision-making. In fact, the period of ‘failure’ in the last quarter of the last century left us with many embedded AI systems quietly doing their jobs and making rigs and platforms, for example, safer places to work. There is only so far that an expert system can go, however. Rules can cover some closed systems that seem quite complicated, but they have no hope of success at, for example, driving a car: the number of rules required is just too great. A different paradigm was needed.
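A minimal sketch of such a rule-based system, under stated assumptions: the freezing point of pure water at 1 atm is taken as 0 °C and is lowered by dissolved impurities (using the cryoscopic constant of water, about 1.86 °C·kg/mol, valid for ideal dilute solutions) and, very slightly, by increasing pressure (roughly 0.0075 °C per atmosphere, an approximation). The sensor fields, the warning margin `MARGIN_C` and the rule wording are hypothetical.

```python
from dataclasses import dataclass

KF_WATER = 1.86          # cryoscopic constant of water, °C·kg/mol (ideal dilute solutions)
PRESSURE_COEFF = 0.0075  # approximate fall in freezing point per extra atmosphere, °C/atm
MARGIN_C = 2.0           # hypothetical warning margin above the freezing point, °C

@dataclass
class Readings:
    temperature_c: float    # from a temperature sensor
    pressure_atm: float     # from a pressure sensor
    solute_molality: float  # dissolved particles, mol per kg of water (an input)

def freezing_point_c(r: Readings) -> float:
    """Knowledge base: 0 °C for pure water at 1 atm, adjusted for impurities and pressure."""
    fp = 0.0
    fp -= KF_WATER * r.solute_molality             # impurities lower the freezing point
    fp -= PRESSURE_COEFF * (r.pressure_atm - 1.0)  # so, slightly, does extra pressure
    return fp

# Rule base: (condition, conclusion) pairs; the first rule whose condition holds fires.
RULES = [
    (lambda r, fp: r.temperature_c < fp, "water is likely to freeze"),
    (lambda r, fp: r.temperature_c < fp + MARGIN_C, "freezing risk: monitor"),
]

def infer(r: Readings) -> str:
    """Inference engine: evaluate the rules in order against the sensor readings."""
    fp = freezing_point_c(r)
    for condition, conclusion in RULES:
        if condition(r, fp):
            return conclusion
    return "no freezing expected"

print(infer(Readings(temperature_c=-1.0, pressure_atm=1.0, solute_molality=1.0)))
```

Keeping the rules separate from the inference loop is what lets domain experts extend the knowledge base without touching the engine; it is also why such systems stall when, as with driving a car, the rule list would have to grow without limit.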

Neural Networks

Neural Networks were so called because they were intended to mimic the way human brains work, although the shared name is probably the greatest similarity between the two. Artificial Neural Networks (which we shall call NN for short; strictly, we should perhaps use ANN) are more of a concept or idea than a specific mathematical procedure.

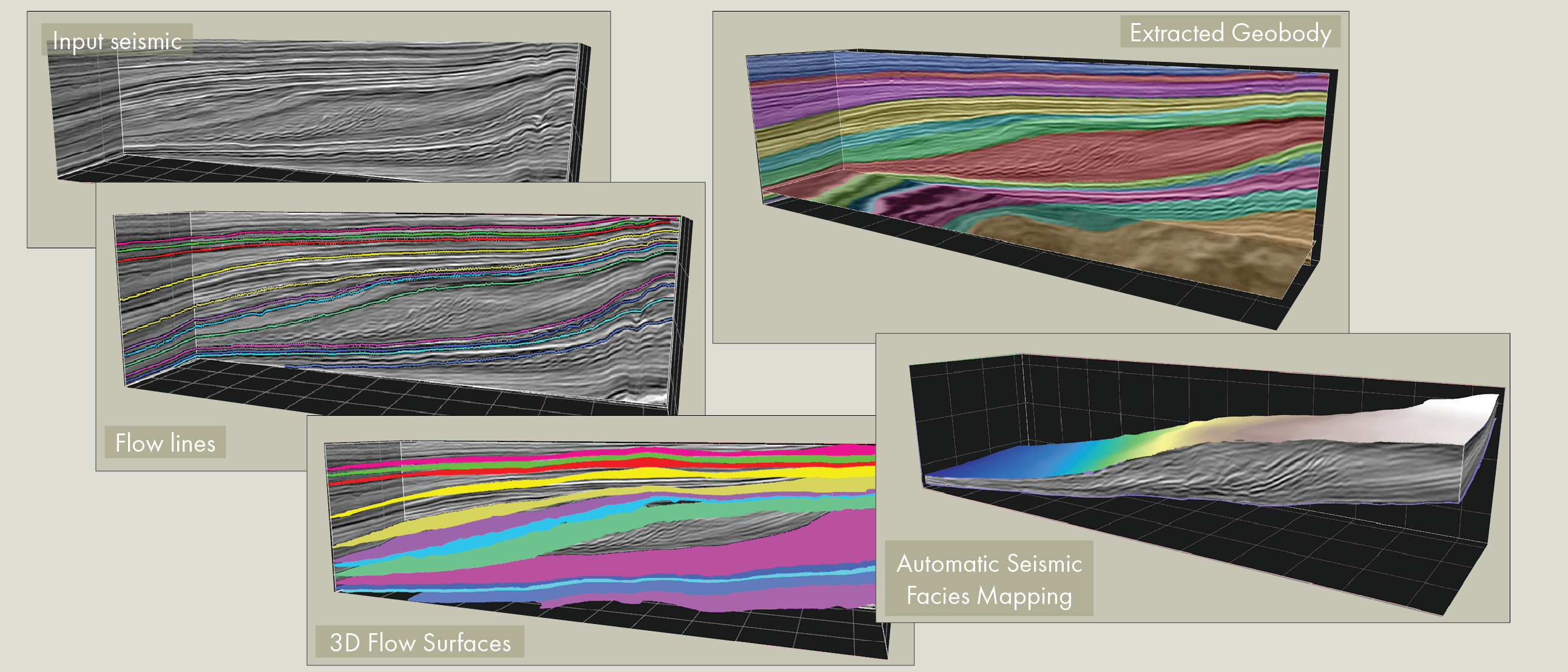

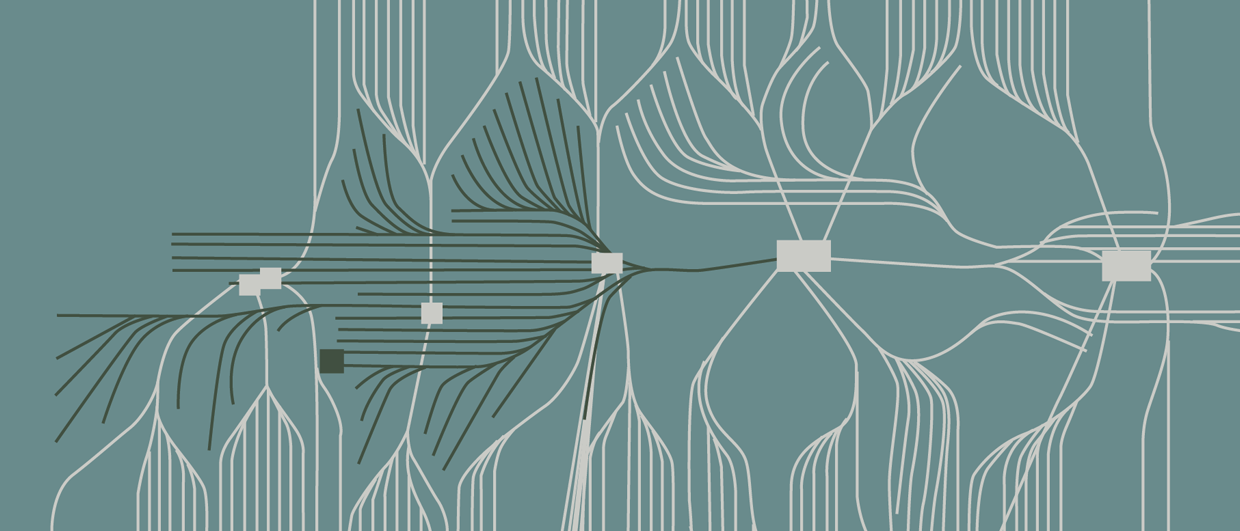

The diagram used to illustrate NN is nearly always akin to this:

which may perhaps be summarised as: ‘There are inputs, there are outputs, and between, there be dragons’. To be useful, a diagram should help explain: what is this diagram intending to explain?

To take a step back: before NN, we could already perform similar procedures, taking inputs and using data to make a prediction. The simplest is linear regression, still the mainstay of prediction from data. It replaces ‘there be dragons’ with ‘find the linear prediction that minimises the (squared) errors in the output’.
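As a reminder of what ‘minimise the squared errors’ means in practice, here is a minimal ordinary least-squares fit of a straight line, y ≈ a + bx, using the closed-form solution (slope = covariance of x and y divided by the variance of x). The function names and the data are illustrative.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y ≈ a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx            # slope
    a = mean_y - b * mean_x  # intercept: the fitted line passes through the means
    return a, b

def sum_squared_errors(a, b, xs, ys):
    """The quantity that least squares minimises."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.1, 2.9, 5.2, 6.8]  # illustrative, slightly noisy data
a, b = fit_line(xs, ys)
print(a, b, sum_squared_errors(a, b, xs, ys))
```

Any other choice of a and b gives a larger sum of squared errors on the same data; that guarantee is what ‘best’ means here.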

This was, however, inadequate in all but the simplest situations. Archie, for example, relied on a two-step process to arrive at a formula (the ‘best prediction’ or, in NN terms, the ‘output’) describing, from the input data, an empirical quantitative relationship between the porosity, electrical conductivity and fluid saturation of rocks.

$$\frac{R_0}{R_w} = \phi^{-m}$$

where $R_0$ = resistivity of the sand when all the pores are filled with brine, $R_w$ = resistivity of the brine, $\phi$ is the porosity fraction of the sand and $m$ is the magnitude of the slope of the straight line representing this relationship on logarithmic axes.

For Archie, the dragons would be replaced by finding a ‘best’ transformation, to get the formula’s form, and then the best linear prediction, after transforming, to find the factors (cementation exponent, saturation exponent) in any specific situation. For us, this is now a simple two-step process, one step followed by another and then the result, but for Archie it was partly a matter of trial and error. He would, of course, have been guided by his knowledge of the physical process involved, which would have led him to try inverse relationships and non-linearity (raising to the power $1/n$), so it was not simply trial and error, but partly so.
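The two steps can be sketched in a few lines, assuming the generalised form F = a·φ^(−m) for the formation factor F = R0/Rw (the factor a is a later addition; Archie’s original form corresponds to a = 1). Taking logarithms is the ‘best transformation’, turning the power law into a straight line; a least-squares line fitted in log space is the ‘best linear prediction’. The porosity values, and the exponent used to generate the formation factors, are synthetic and for illustration only.

```python
import math

def fit_line(xs, ys):
    """Ordinary least squares for y ≈ intercept + slope*x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

def fit_archie_m(porosity, formation_factor):
    """Return (m, a) for F = a * phi**(-m)."""
    # Step 1, transform: log F = log a - m * log phi is a straight line.
    xs = [math.log(p) for p in porosity]
    ys = [math.log(f) for f in formation_factor]
    # Step 2, best linear prediction in the transformed space.
    intercept, slope = fit_line(xs, ys)
    return -slope, math.exp(intercept)

# Synthetic data generated with m = 2 and a = 1 (no noise).
porosity = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30]
formation_factor = [p ** -2.0 for p in porosity]
m, a = fit_archie_m(porosity, formation_factor)
print(f"m = {m:.3f}, a = {a:.3f}")
```

With real, noisy measurements the recovered exponent would carry an uncertainty; the same log transformation would serve for fitting the saturation exponent n.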

A computer, on the other hand, would have no knowledge of the process, so would have to rely entirely on trial and error. It more than compensates, however, by being able to conduct many more trials and to compute the errors quickly. Each trial would involve trying a transformation, or formula form, and finding out how well it makes a prediction using the best possible factors or exponents. If the computer tried each combination of formula form and best-fitting exponents independently, it would be ignoring an important source of information: if one trial is worse than the previous one, the search is heading in the wrong direction. So, the two steps should communicate. If they do, we have a simple two-layer back-propagation Artificial Neural Network.
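The ‘communicating’ search can be sketched under simplifying assumptions: a single formula family y ≈ c·x^p (a hypothetical stand-in for Archie’s candidate forms), an inner step that finds the best scale factor c by least squares for a given exponent p, and an outer compass (pattern) search that moves p only when the inner step reports a smaller error, halving its step when both directions are worse. This is not back-propagation, which passes error gradients back through a network’s layers; it only illustrates the outer step using the inner step’s error to choose a direction.

```python
def fit_scale(gs, ys):
    """Inner step: least-squares c for y ≈ c * g (line through the origin)."""
    return sum(g * y for g, y in zip(gs, ys)) / sum(g * g for g in gs)

def error_for_exponent(p, xs, ys):
    """Transform x -> x**p, fit the best scale, and report (squared error, scale)."""
    gs = [x ** p for x in xs]
    c = fit_scale(gs, ys)
    return sum((y - c * g) ** 2 for g, y in zip(gs, ys)), c

def compass_search(xs, ys, p=-1.0, step=0.5, tol=1e-6):
    """Outer step: move the exponent only in a direction that reduces the error."""
    best, _ = error_for_exponent(p, xs, ys)
    while step > tol:
        for candidate in (p - step, p + step):
            err, _ = error_for_exponent(candidate, xs, ys)
            if err < best:
                p, best = candidate, err
                break
        else:
            step /= 2  # both directions were worse: narrow the search
    return p, error_for_exponent(p, xs, ys)[1]

# Synthetic data generated with exponent -2 and scale 1 (illustrative only).
xs = [0.05, 0.10, 0.15, 0.20, 0.25, 0.30]
ys = [x ** -2.0 for x in xs]
p, c = compass_search(xs, ys)
print(f"exponent = {p:.4f}, scale = {c:.4f}")
```

On this synthetic data the search settles on an exponent of −2 and a scale of 1; a blind search that ignored whether each trial was better or worse than the last would need far more trials to get there.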

Linear regression is readily extended to non-linear and multiple regression but, ultimately, there are only so many ways of picking out trends or conclusions from data and, critically, the principle of correlation or coincidence lies at the heart of all those we currently know: each finds the fit that minimises the errors, and the least error is what we want to achieve. Machine Learning and Deep Learning are therefore, at heart, generalisations of the familiar processes of correlation and regression.
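To show how readily the idea extends: with several inputs, the same least-squares criterion leads to the ‘normal equations’ (XᵀX)β = Xᵀy. The sketch below solves them with plain Gaussian elimination on a small invented data set generated from y = 1 + 2x₁ − 3x₂, so the fit should recover those coefficients. In practice one would use a numerical library, preferably with a QR- or SVD-based solver, which is better conditioned.

```python
def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x

def multiple_regression(rows, ys):
    """Least-squares coefficients [intercept, b1, b2, ...] via the normal equations."""
    X = [[1.0] + list(r) for r in rows]  # prepend a column of ones for the intercept
    p = len(X[0])
    xtx = [[sum(x[i] * x[j] for x in X) for j in range(p)] for i in range(p)]
    xty = [sum(x[i] * y for x, y in zip(X, ys)) for i in range(p)]
    return solve(xtx, xty)

# Invented inputs; outputs follow y = 1 + 2*x1 - 3*x2 exactly.
rows = [(0, 0), (1, 0), (0, 1), (1, 1), (2, 1)]
ys = [1 + 2 * x1 - 3 * x2 for x1, x2 in rows]
print(multiple_regression(rows, ys))
```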

Problems: Conceptual Rather than Mathematical

When Archie was performing his non-AI investigation, he knew which formula form and which combination of parameters performed the best: it was the form and combination that gave the best prediction of water saturation for the resistivity data he had available. In other words, he knew the answer he wanted, and so had a way to measure ‘error’ and a meaning for ‘best’. This was because he had sufficient data, from wireline logs.

In other situations, data may not be necessary. Owing to its wide exposure in the popular press, one of the best-known examples of deep learning is AlphaGo, a program that taught itself to improve at playing Go and then defeated the world’s leading Go players. In terms of complexity, this was a greater achievement than Deep Blue’s success at chess. And yet in some ways, the developers of AlphaGo had a comparatively simple task, because ‘best’ is so easily described: the best result is a win. There are no points for ‘how you played the game’; it is all or nothing. Thus it is, conceptually at least, easy to set up a computer to play a very large number of games of Go and have the software remember, or ‘learn’ in the current jargon, that moves that lead to a win are better than moves that lead to a loss.
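The ‘remember which moves led to a win’ idea can be sketched on a game far smaller than Go. The sketch assumes a hypothetical take-away game: players alternately remove one or two counters, and whoever takes the last counter wins. The program plays random games against itself, credits each move whose maker eventually won, and then prefers the move with the highest observed win rate. This is a toy Monte Carlo tally, far simpler than AlphaGo’s actual combination of deep neural networks, tree search and reinforcement learning.

```python
import random
from collections import defaultdict

START_PILE = 7  # hypothetical starting number of counters

def legal_moves(pile):
    """A player may remove one or two counters (never more than remain)."""
    return [m for m in (1, 2) if m <= pile]

def self_play(n_games, seed=0):
    """Play random games against itself, crediting moves whose maker eventually won."""
    rng = random.Random(seed)
    wins = defaultdict(int)   # (pile, move) -> games won by the player who made it
    plays = defaultdict(int)  # (pile, move) -> times the move was made
    for _ in range(n_games):
        pile, player, history = START_PILE, 0, []
        while pile > 0:
            move = rng.choice(legal_moves(pile))
            history.append((player, pile, move))
            pile -= move
            player = 1 - player
        winner = 1 - player  # whoever took the last counter
        for mover, state, move in history:
            plays[(state, move)] += 1
            wins[(state, move)] += mover == winner
    return wins, plays

def best_move(pile, wins, plays):
    """Greedy policy: the move with the highest observed win rate from this position."""
    return max(legal_moves(pile),
               key=lambda m: wins[(pile, m)] / max(plays[(pile, m)], 1))

wins, plays = self_play(20_000)
for pile in range(1, START_PILE + 1):
    print(pile, best_move(pile, wins, plays))
```

The win rates are measured against a random opponent, yet from piles of 7, 5, 4 and 2 the tallies should favour the move that leaves the opponent a multiple of three, which is the winning strategy in this game. The only ‘objective’ the program is given is win or lose.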

Objective Function

However it is obtained, whether from theory or from a combination of data and experience, any learning algorithm requires a definition of what is good and what is bad: it needs an ‘objective function’ that can quantify the value of an output and hence tell it whether it is on the right lines or barking up the wrong tree. We have now seen two possible sources of an objective function, that is, of a judgement of what constitutes ‘best’: AlphaGo’s win/lose outcome and Archie’s data. This judgement is necessary; without it, there can be no learning, and in the absence of an ‘Ask Solomon’ command in computer software, we have to rely on either rules (theory) or a combination of data and experience.

It is in the potential pitfalls of choosing an arbiter of good versus bad that machine learning is most commonly criticised. Many of the commonly cited examples turn out, on investigation, to be apocryphal. An enduring urban legend involves the US Department of Defense (DoD): during the Cold War, the DoD is said to have tried to train an AI to distinguish friendly tanks from enemy tanks so that missiles could be fired automatically. Demonstrations were promising but trials were disastrous, as the AI targeted friendly tanks. Some versions of the story say that the AI had been trained on photos in which all the friendly tanks were in fields and all the enemy tanks in forests; others that friendly tanks were photographed in the morning and enemy tanks in the afternoon. Thus, the AI was distinguishing between the presence or absence of trees, or the direction of shadows. It is so enticing a story that it is still repeated despite having no foundation.

There are nevertheless enough real mistakes to show that lessons have to be learned. In one recent example, US government facial recognition software was found to be 98% accurate with Caucasian males but barely better than guesswork with some other racial groups. Whilst that error can readily be seen, how will we know, just by looking at the outputs (results), whether the AI we have put in charge of exploration is, for example, using data from carbonate fields to make judgements about clastic reservoirs? The ability to interrogate an AI, to ask it why it made its choices, is currently missing, and it becomes more difficult to achieve with each increase in the ‘depth’ of a Deep Learning system. In the absence of any way to interrogate the AI, we, the experts, are still necessary, at least for the time being, to critically appraise the learning process.
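Checking outputs group by group is one simple, if partial, safeguard: a single headline accuracy can hide a subgroup on which a model fails. The records below are invented for illustration, and the check only helps if we already know which groups are worth separating (here, hypothetically, carbonate versus clastic reservoirs), which is exactly where domain expertise comes in. It does not, of course, explain *why* the model chose as it did.

```python
from collections import defaultdict

# Hypothetical validation records: (reservoir type, predicted outcome, actual outcome).
RECORDS = (
    [("carbonate", "oil", "oil")] * 4
    + [("carbonate", "dry", "dry")] * 4
    + [("clastic", "oil", "dry"), ("clastic", "dry", "oil")]
)

def overall_accuracy(records):
    """Fraction of all predictions that were correct."""
    return sum(pred == actual for _, pred, actual in records) / len(records)

def accuracy_by_group(records):
    """Fraction of correct predictions within each group."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, pred, actual in records:
        totals[group] += 1
        hits[group] += pred == actual
    return {group: hits[group] / totals[group] for group in totals}

print(overall_accuracy(RECORDS))
print(accuracy_by_group(RECORDS))
```

Here the headline accuracy is 80%, yet every clastic prediction is wrong.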

So, we have a powerful tool that we are under strong pressure to put to good use, but we know it has limitations.

How can we use our knowledge of the application domain (i.e., exploration geology and geophysics) to minimise the chances of targeting the ‘wrong tanks’? The answer should lie partly in a good understanding of the technology, but also in a little common sense. For example, in applications involving sequences, from lithostratigraphy to seismic interpretation, we know that a perfect fit in one sequence is of no use if it means the surrounding sequences cannot be reconciled. This problem is recognised in AI too, where it is sometimes known as the buttons problem.
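A minimal sketch of the idea, assuming a hypothetical setup: three adjacent wells, each with candidate depths for the same horizon pick and a local misfit score for each candidate (all values invented). A greedy approach takes the best local fit in each well independently; a joint approach adds a penalty, weighted by a hypothetical `LAMBDA`, for depth differences between neighbouring wells, and searches all combinations by brute force. This is ordinary regularisation, not a specific published treatment of the buttons problem.

```python
from itertools import product

# Hypothetical candidate picks: depth (m) -> local misfit, for three adjacent wells.
CANDIDATES = [
    {1000: 0.2, 1050: 0.3},  # well A
    {1010: 0.4, 1200: 0.1},  # well B: its best local fit, at 1200 m, is isolated
    {1020: 0.2, 1060: 0.5},  # well C
]
LAMBDA = 0.01  # hypothetical weight: misfit units per metre of mismatch

def greedy_picks(candidates):
    """Best local fit in each well, ignoring the neighbours."""
    return tuple(min(c, key=c.get) for c in candidates)

def joint_cost(picks, candidates, lam):
    """Total local misfit plus a penalty for disagreement between neighbours."""
    misfit = sum(c[d] for c, d in zip(candidates, picks))
    mismatch = sum(abs(a - b) for a, b in zip(picks, picks[1:]))
    return misfit + lam * mismatch

def joint_picks(candidates, lam):
    """Brute force over every combination; fine for a handful of wells."""
    return min(product(*(c.keys() for c in candidates)),
               key=lambda picks: joint_cost(picks, candidates, lam))

print("greedy:", greedy_picks(CANDIDATES))
print("joint: ", joint_picks(CANDIDATES, LAMBDA))
```

The greedy choice accepts the ‘perfect’ local fit in well B and leaves the neighbours irreconcilable; the joint choice gives up a little local fit for a consistent horizon. Choosing the penalty weight is itself a judgement that calls for geological experience.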

In summary, we should be the best source of knowledge of the problems, from having worked in the field and seen the data. Handing over everything to the computer without also handing over this experience is a recipe for failure. We will explore this theme some more in Part 2.

Thumbnail image: Shutterstock

More In This Series…

Artificial Intelligence – Its Use in Exploration and Production

Dr Barrie Wells, Conwy Valley Consultants

Part 2 – Machine Learning: magical or mathematical statistics? De-mystifying AI and setting it in a geo-exploration context.

This article appeared in Vol. 19, No. 2 – 2022