It is almost thirty years since I started working in reservoir characterization and stochastic modeling. Over those three decades I have had plenty of opportunities to watch how this modeling technology is being used, and there are some days when I feel as though Pandora’s box has been opened. Before we start, let me define my terms: by ‘model’ I mean a 3D representation of a reservoir, most typically a geocellular model.

What surprises me most is the unsuitability of the models that are being made for the questions that are being asked. As static modeling became possible during the nineties, the concept of a ‘shared-earth model’ was proposed and embraced: a single model that could be viewed by anyone from any discipline, which would ensure that everyone was thinking about the reservoir in the same way. Calculations could be made on this model by any of the geoscientists or engineers, knowing that they would be consistent with the other disciplines. A simple thought experiment may highlight the folly of this approach.

A Shared-View Model?

Figure 1: Wing loading test on a Dreamliner. Aeroplane designers and engineers do not use single shared-view models, so should geoscientists? (Source: © Boeing)

If we try to imagine a perfect model, it would behave in exactly the same way as the real reservoir. Every interrogation of the model would give the same answer as investigating the real reservoir. This is manifestly improbable even for the simplest reservoirs. How can we get around this difficulty? If we relax our requirement that the model is perfect to just ‘good’, then we would still be expecting ‘good’ answers to the same wide range of questions. I think that ‘good’ means ‘close to the real reservoir’s response’. The simplest solution is to frame the problem and restrict the range of questions for which our model is valid.

What happens in other disciplines that use computer models? Let’s look at aerospace. Before the Boeing Dreamliner first flew, millions of hours of computer simulations were run. But were they run on a single shared-view model? No, different models were built for different questions, from the time it would take to evacuate the passengers to the strength of the wing joints. Then many of these simulations were tested mechanically and the results were used to recalibrate the models. Figure 1 shows just how far you can bend the wings on a plane, so don’t get stressed if you see them wobble a bit in flight!

So, the aerospace engineers build specific models for specific questions. Then, when they have real data, they don’t just add this to the model like we add a new well to a reservoir model. They work out the difference between their predictions and their measurements and use this to determine a confidence in their predictions. This, to me, appears quite a different philosophy to reservoir modeling. Geoscientists receive new data from new wells, include them in the model, rebuild the 3D grid, and then their model is ‘right’. Wrong! Each time new data is added there is an opportunity to assess the predictive capability of your model. This important step is usually overlooked.
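As a minimal sketch of that overlooked step, assuming the model’s pre-drill predictions were saved before the new wells were incorporated, the comparison could look like this in Python. The function name `blind_test` and the net-sand thickness values are hypothetical and purely illustrative.

```python
import math

def blind_test(predicted, measured):
    """Compare pre-drill model predictions with post-drill measurements.

    Returns (bias, rmse): the mean error and the root-mean-square error,
    both in the units of the input property.
    """
    errors = [m - p for p, m in zip(predicted, measured)]
    n = len(errors)
    bias = sum(errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    return bias, rmse

# Hypothetical net-sand thickness (m) at three new well locations:
# what the model predicted before drilling, and what the wells found.
predicted = [12.0, 8.0, 15.0]
measured = [10.0, 9.0, 11.0]

bias, rmse = blind_test(predicted, measured)
print(f"bias = {bias:.2f} m, rmse = {rmse:.2f} m")
```

Tracking these two numbers each time wells are added gives a running record of predictive capability. A persistent bias suggests the model is systematically optimistic or pessimistic, which simply absorbing the new wells into the grid would hide.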

Modeling Aims

If we accept that the desire to build a shared-earth model adds to our woes, we now need to think more carefully about the aims of our modeling project, the best way to frame it, and how we can ensure that our model is only used within the appropriate frame.

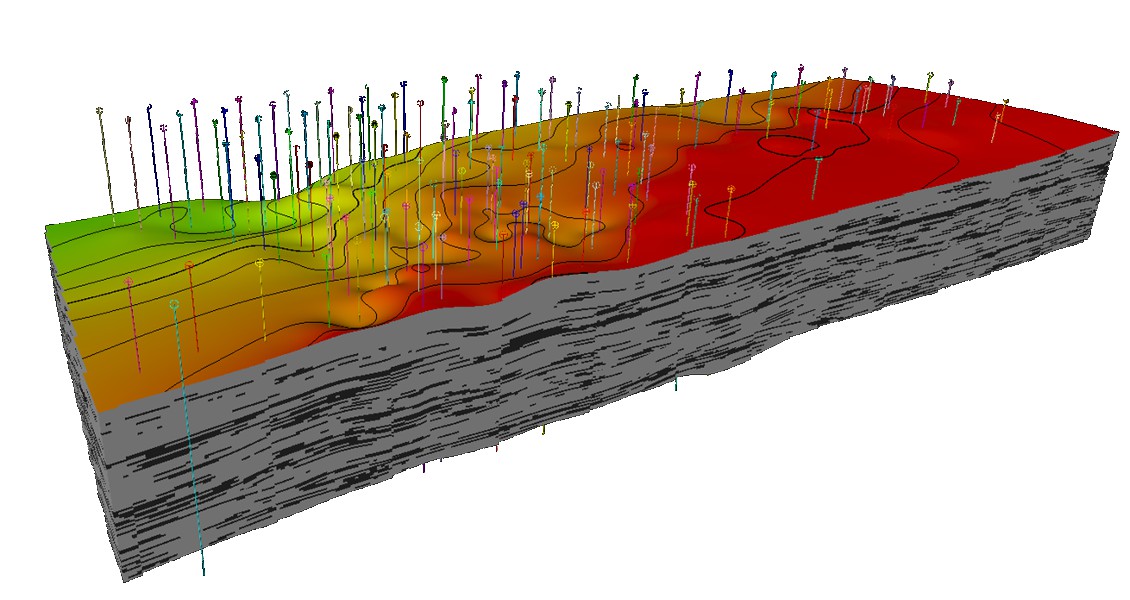

Qualitative: I think the most important contribution that reservoir modeling has made over the last three decades is in communication. Visualization is very powerful, and 3D images of reservoirs really help everyone understand the problems that their colleagues in other disciplines face. So building a model with the aim of providing a visualization of the reservoir seems to be a good aim. It helps get investors, both internal and external, onside because they can now picture what you are trying to do. But this model should be tattooed to show that it is for qualitative use only.

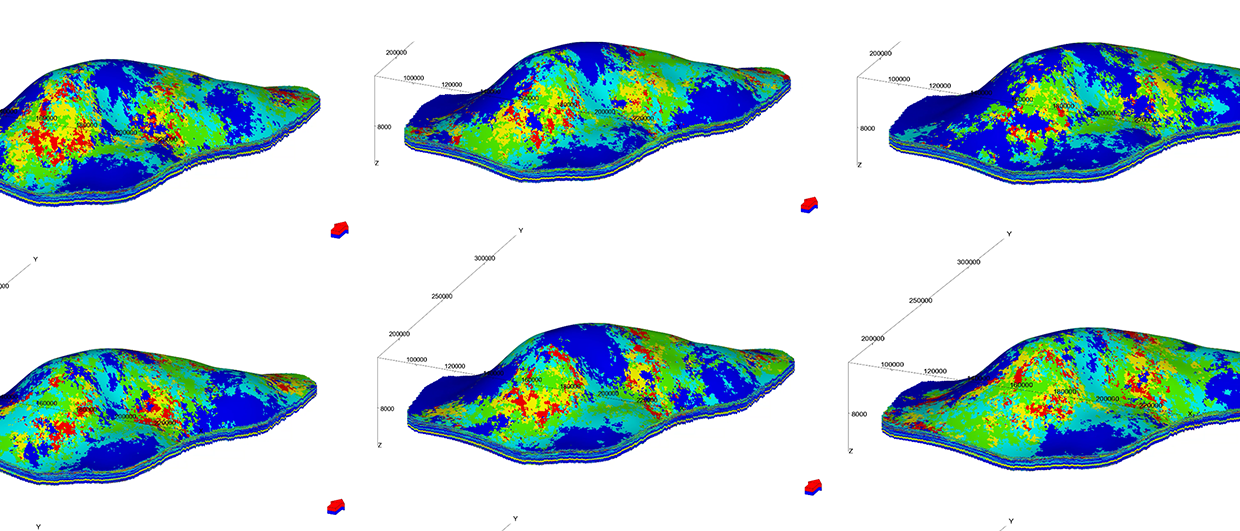

Quantitative: If we want to use the reservoir model for quantitative aims then we have to come back to the concept of predictive capability and rigorously and fastidiously quantify and improve on this. I think that we also need to be much more careful with our framing. Here, I will focus on two main quantitative modeling aims: volumetrics and flow simulation.

Let’s look at simulation first, since flow simulation predates static reservoir modeling by almost 10 years. How can you frame your modeling project to make the predictions more accurate? The general technique would be to make smaller models; single-well models and sector models reduce the number of parameters in the solution space and therefore the complexity of the problem. Models over a smaller volume allow you to increase the resolution without blowing out your computer memory. Radial models and 2D models were all the rage in the ’80s; I know that computers were much smaller then, but this forced engineers to think about the appropriate scale for modeling and to build up an understanding of the reservoir by looking at individual wells. Sadly, I don’t see many single-well models and 2D models any more. Perhaps today’s workflows simply make it too easy to create full-field models.

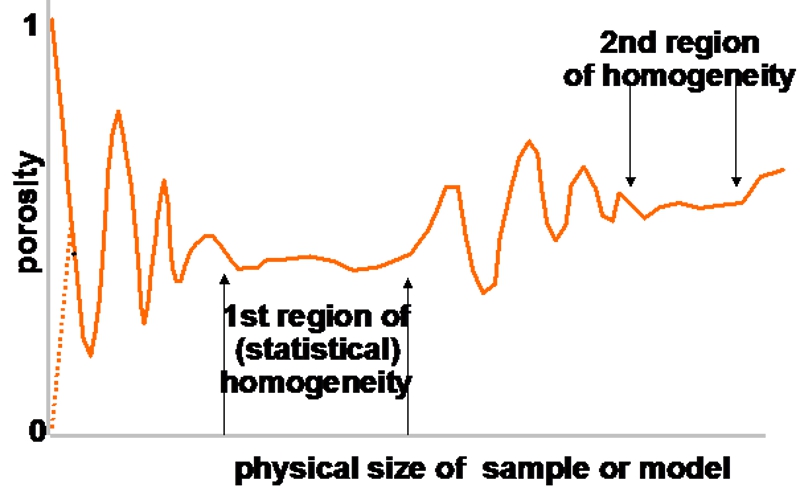

Figure 3: Homogeneous scale ranges. (Source: after Bear, 1972)

Static reservoir models are most often used to compute reservoir volumes. An important task and an admirable aim, but increasing the resolution of your reservoir model only improves the precision of the calculation, not the accuracy. To make your life easier you have to consider the well-known diagram by Jacob Bear shown in Figure 3, of homogeneity as it relates to scale. If you consider any 2D or 3D system of sand and shale, then measurements at a certain, probably small, volume may be either 100% sand or 100% shale. At a different measurement volume you may find 50% sand and 50% shale (or some other fixed proportion) in almost every sample.

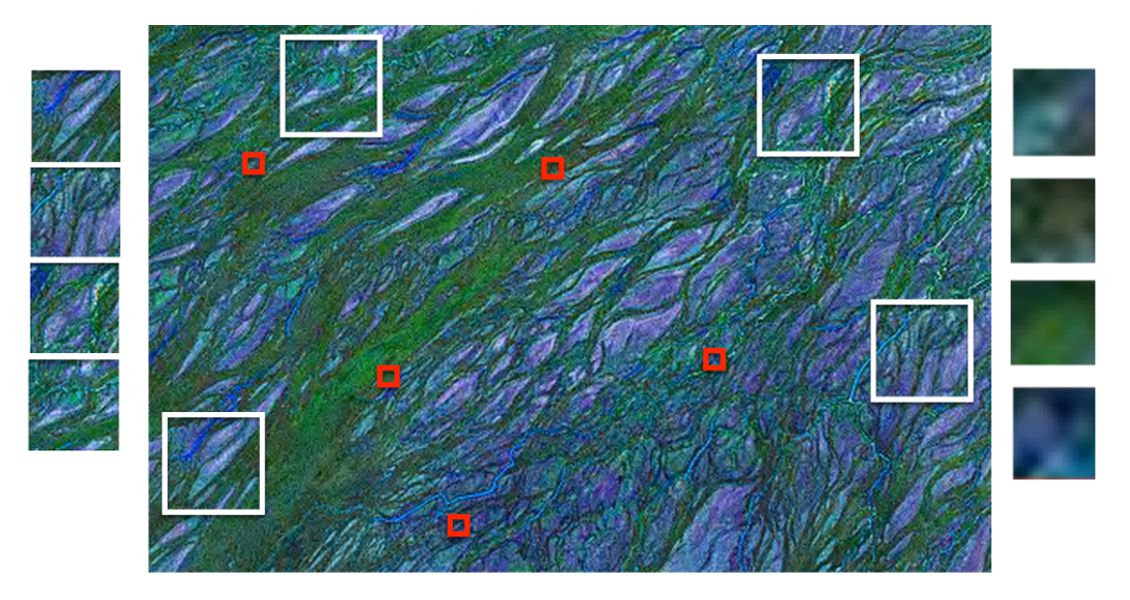

Figure 4 shows a satellite image of a braided stream. Looking in each of the red squares, which have been enlarged to the right of the main image, you see predominantly either green or blue pixels, but looking in each of the larger white squares, enlarged to the left, you will see a more consistent mixture of green and blue pixels. If you then model at the white scale your modeling task is massively simplified. I have seen two companies with adjacent acreage, one modeling at a homogeneous scale and the other agonizing over a model at a scale that had a much larger variance. And the impact of the two approaches on the volumetrics calculation? Well, when you look at errors in the velocity model and some of the petrophysical parameters and then run some uncertainty modeling, there’s almost no difference in most cases, except that you’ve saved about six months’ work.
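The red-square versus white-square effect is easy to reproduce on a synthetic grid. The sketch below is an illustration only, not a reconstruction of Figure 4: it assumes a square grid of sand (1) and shale (0) made of uniform square bodies placed at random, and the names `make_grid` and `sand_fraction_spread` are hypothetical. It measures how much the sand fraction varies from one sampling window to the next as the window grows.

```python
import random
import statistics

def make_grid(n, body, net_to_gross, rng):
    """Binary sand (1) / shale (0) grid of size n x n built from body x body blocks."""
    blocks = n // body
    block_vals = [
        [1 if rng.random() < net_to_gross else 0 for _ in range(blocks)]
        for _ in range(blocks)
    ]
    return [[block_vals[i // body][j // body] for j in range(n)] for i in range(n)]

def sand_fraction_spread(grid, window):
    """Standard deviation of sand fraction across non-overlapping square windows."""
    n = len(grid)
    fractions = []
    for i in range(0, n - window + 1, window):
        for j in range(0, n - window + 1, window):
            total = sum(
                grid[a][b]
                for a in range(i, i + window)
                for b in range(j, j + window)
            )
            fractions.append(total / (window * window))
    return statistics.pstdev(fractions)

rng = random.Random(0)  # fixed seed so the illustration is repeatable
grid = make_grid(n=128, body=4, net_to_gross=0.5, rng=rng)

for window in (2, 8, 32):
    spread = sand_fraction_spread(grid, window)
    print(f"window {window:>2} cells: spread of sand fraction = {spread:.3f}")
```

Windows smaller than a sand body see either all sand or all shale, so the spread is large. Windows many bodies wide see nearly the same proportion everywhere, which is the homogeneous scale range at which the modeling task becomes much simpler.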

Model Types

Over the years I have been involved in a lot of different modeling activities and this has led me to classify reservoir models according to their purpose. Generally models lose utility if they change purpose. Going back to the shared-earth model, I think there is very significant value in collecting all the data from a reservoir or field or region in a single location, in being able to cross-reference this data and ensure its domain and referential integrity. This often involves visualizing objects from different disciplines in the same graphical space and is an absolutely vital activity. I would prefer to be a little pedantic and call this a database, and as far as possible exclude modeled data, i.e. the 3D grid, from it. If you insist on calling this a model, then it would be a ‘Museum Model’. Stuff is collected, categorized and stored in the museum. Geoscientists can look at the museum and find out about the exhibits but only the museum’s curators can change it. More mature reservoirs have better museum models.

At the other end of the spectrum is what I call the ‘Forensic Model’. The data is limited. You have to work quickly to realize an opportunity. The reservoir is like a crime scene and you’re looking for clues. You approach the problem with the open mind of a seasoned detective and quickly eliminate the usual suspects. It is very important to focus in on what really matters so your model tends to be light and small. You are trying to understand anomalous behavior to make better decisions. You have fastidiously collected all the data from the crime scene and you need to start testing your theories as to what might be happening. At this point do you create a full-field model?

No. We try to restrict the scope of the model to answer the simplest question that will confirm or refute our hypothesis, and then build the ‘Hypothesis Model’. By choosing to build models to answer simple questions we can build up a more reliable predictive capability, albeit limited in scope. Take a simple example: sand bodies connect together differently in 2D and 3D systems, so the probability of connectivity between wells depends on the net-to-gross, the shape of the sand bodies and the dimensionality of the system. A series of very simple models can be made to determine whether the system is 2D or 3D and test this hypothesis.
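To show how light such a hypothesis model can be, here is a sketch under strong simplifying assumptions: each cell is independently sand with probability equal to the net-to-gross, sand bodies are single cells, cells connect only through shared faces, and the two ‘wells’ are stand-ins for opposite faces of the grid. The function names `spans` and `connection_probability` are hypothetical. The sketch estimates how often sand connects one face to the other in a 2D and a 3D system at the same net-to-gross.

```python
import random
from collections import deque
from itertools import product

def spans(shape, net_to_gross, rng):
    """True if face-connected sand links the first and last slice along axis 0."""
    sand = {
        cell
        for cell in product(*(range(s) for s in shape))
        if rng.random() < net_to_gross
    }
    start = [c for c in sand if c[0] == 0]
    seen = set(start)
    queue = deque(start)
    while queue:  # breadth-first search through connected sand cells
        cell = queue.popleft()
        if cell[0] == shape[0] - 1:
            return True
        for axis in range(len(shape)):
            for step in (-1, 1):
                nb = cell[:axis] + (cell[axis] + step,) + cell[axis + 1:]
                if nb in sand and nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
    return False

def connection_probability(shape, net_to_gross, trials=50, seed=0):
    """Fraction of random realizations in which the two faces are connected."""
    rng = random.Random(seed)
    return sum(spans(shape, net_to_gross, rng) for _ in range(trials)) / trials

for ntg in (0.3, 0.45, 0.6):
    p2d = connection_probability((20, 20), ntg)
    p3d = connection_probability((12, 12, 12), ntg)
    print(f"net-to-gross {ntg:.2f}: 2D {p2d:.2f}, 3D {p3d:.2f}")
```

For this idealized random-cell system the known percolation thresholds are roughly 0.59 in 2D and 0.31 in 3D, so at intermediate net-to-gross the two dimensionalities give opposite answers about connectivity. Real sand-body shapes will shift those numbers, but the experiment takes seconds to run and bears directly on the hypothesis.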

Identifying the most important questions about a reservoir’s behavior and designing appropriate experiments to test hypotheses sounds like a sensible strategy. It is how science has progressed since the Renaissance. Scientists do not build big complicated models of everything. Engineers do not build big complicated models of rockets and cars and planes. Why do geologists?

The Future

We will certainly see more reservoir models as the industry appears addicted to them. Undoubtedly resolution will get finer and static models will approach multi-gigacell size. In the meantime I hope to see some real movement towards verification, validation and acceptance testing of geological models, as well as new ways to quantify the predictive capability of models. We need an industry-wide aim to improve that capability by fastidiously assessing the overall quality of the model as new information becomes available, rather than relying on blind add-and-update workflows.