Decoding Nature’s Disorder

Information is the resolution of uncertainty. Claude Shannon.

Talk clearly and not so far-fetched as a cipher. Medieval – origin unknown.

In many languages the word ‘cipher’ means ‘number’. However, the current meaning of ‘cipher’ in English – an ‘algorithm for performing encryption or decryption’ – can be traced back to the time when it meant ‘zero’ and the concept of ‘zero’ was new and confusing.

Figure 1: Military Enigma Machine. The three (of five) rotors with 26 positions each are clearly indicated.

In the military barracks of Bletchley Park in Buckinghamshire, UK, one of the world’s first computers (the so-called ‘Bombe’) was constructed for the single purpose of cracking military codes generated by the German Enigma encryption machine (Figures 1 and 2). Combining three rotors from a set of five, each rotor setting having 26 positions, and a plug board with ten pairs of letters connected, the Enigma machine had 158,962,555,217,826,360,000 (nearly 159 quintillion) different settings. Even a very fast modern computer could not systematically go through all those settings. The British had access to an Enigma machine but did not know the settings, which varied daily. A team of highly talented people working with the brilliant mathematician Alan Turing managed to crack the German codes in an ingenious way that involved realizing that the Germans would always start their encrypted radio transmissions with a weather report. This observation combined with groundbreaking advances in statistics allowed Turing and his team to limit the possibilities and guide the ‘Bombe’. The story of Alan Turing is as fascinating as it is tragic; only recently has the true nature of his genius and his impact on the 20th century been recognized. Breaking the Enigma code is believed to have shortened WWII by two to four years and saved millions of lives.

Figure 2: Replica of the ‘Bombe’ decoding machine built by Turing at Bletchley Park during WWII. (Source: Tom Yates/Wikipedia)

The goals for encrypting or encoding messages and signals have been and always will be as varied as they are interesting: from communicating securely with the front-line in war time, to increasing the communication capacity in cellular phone networks. A ‘bit’ of information represents the resolution of uncertainty between two distinct states/symbols. N bits of information resolve or distinguish between 2N different states or symbols.

In 1948, Claude Shannon calculated that the maximum amount of information that can be reliably communicated between a single source and receiver is given by the formula:

where S is the received signal power, N is the noise power, and information is measured in bits per second per Hertz of bandwidth available for transmission (Shannon, 1948; Simon, 2001). Note that Shannon’s equation is a statement about the channel through/over which the information is communicated. It does not say anything about the source that is producing the signals or messages. The average information produced by a source is called the entropy of the source and is also measured in bits. For a source producing n discrete symbols with probability pn, the entropy is computed as:

The concept of a measure of the average information content produced by a source is extremely important and parallels concepts such as structure or sparseness, which we will briefly get back to in the next instalment. We will now look at what limited hopes we have when encoding signals if we ignore or do not know the statistics of the signals produced by the source.

Encoding, Decoding and the Welch Bound

Often one does not have access to the source signals per se (i.e., one cannot encode them), but it is the structure in the source signals, together with a multiplicity of measurements, that enables the decoding or separation. In many other cases, however, one does have access to the source signals and the question arises as to whether one can do better when encoding the source signals and if one can do away with the requirement of multiple measurements. One strategy could be to encode the source signals with a unique time-series, each of which has both low autocorrelation (apart from the central peak) as well as mutual cross-correlation properties. The individual source wavefields can then be obtained from the encoded simultaneous-source signal simply by cross-correlating the recorded data with the respective encoding sequence. In 1974, L. R. Welch derived a lower bound on the maximum cross-correlation of signals. This bound shows that, as one might expect, the maximum cross-correlation and off-peak autocorrelation values of a set of sequences cannot be arbitrarily low.

Consider a set of M general, complex-valued sequences {an}, {bn}… {mn} of length N, the discrete aperiodic/nonperiodic correlation function between a pair of sequences is defined as:

When a=b, this equation also defines the discrete aperiodic autocorrelation function. Let Cam denote the maximum out-of-phase (i.e. off-peak) autocorrelation value and Ccm denote the maximum cross-correlation value. Then the Welch bound is derived as follows (Welch, 1974):

The Welch bound is a very useful result since it tells us, given the number of sequences in the set (M) and the length of the sequences (N), how low the maximum cross-correlation and off-peak autocorrelation values can be. Sequence sets which achieve the Welch lower bound exactly are known as Welch bound equality sets, the best-known of which is the Kasami sequence set.

Nature’s Way of Encoding

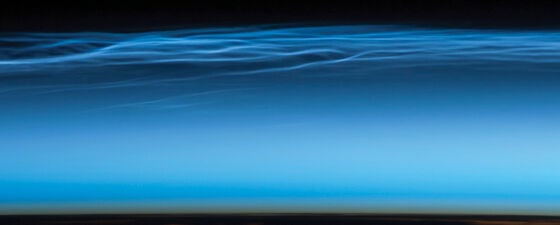

It turns out that random noise can be an extremely effective natural encoder. While earthquakes are recorded only intermittently, a seismic background noise wavefield is present and being continuously recorded. Usually, the exact origins of this wavefield are unknown. One source can be attributed to atmospheric pressure variations, which induce water waves that convert into low-frequency microseisms (faint earth tremors caused by natural phenomena) when crashing onto the shore. Despite the relatively low amplitude level, the continuous and random nature of such a background wavefield makes it possible to correlate it between receiver stations.

Although the existence of these noise bands have been known for more than half a century, it was only in the early 2000s that seismologists realized that, by cross-correlating such noise wavefields at different receiver stations, a wealth of information about the structure in between the correlated receivers can be extracted in the form of the interreceiver Green’s function. This process of cross-correlating (noise) data recorded at different receivers is now known as interferometry and can also be thought of as turning one of the receivers into a virtual source (Figure 3).

Figure 3: Snapshots of the normalized amplitude of the ambient noise cross-correlation wavefield with TA station R06C (star) in common at the centre. Each of the 15–30s band-passed cross-correlations is first normalized by the rms of the trailing noise and fitted with an envelope function in the time domain. The resulting normalized envelope function amplitudes are then interpolated spatially. Two instants in time are shown, illustrating clear move-out and the unequal azimuthal distribution of amplitude. (Reproduced from Lin et al., 2009, with permission from Profs. Lin and Snieder)

As noise data from any pair of receivers can be crosscorrelated, the number of virtual sources and receivers that can be created using this method is proportional to the square of the number of receivers. It turns out that such inter-receiver Green’s functions constitute ideal datasets for high-resolution surface wave tomography in regions where there is ample background noise and many receivers but few earthquakes. Thus, nature had conveniently encoded the information for us, but it took us some time to understand how to decode it! A similar strategy for interferometric modeling of Green’s functions between a large number of points in the interior of a model has been proposed by van Manen et al. (2005). In that case, however, when the data does not come for free the Welch bound predicts that the quality of the data after separation of the simultaneous simulation is proportional to the square root of the simulation time (Figure 4).

Figure 4: (a) Snapshots of the multiply-scattered reference wavefield when placing a source in one of the receiver positions (blue triangles). (b) Retrieved Green’s function (blue) obtained by cross-correlating and stacking an increasing number of 65,536 sample segments of noise-encoded wavefields recorded at the receiver locations versus the reference waveform (red). As expected from Welch’s bound, the quality of the decoding improves as the square-root of the number of segments stacked (or the total length of the simulation).

Figure 4: (a) Snapshots of the multiply-scattered reference wavefield when placing a source in one of the receiver positions (blue triangles). (b) Retrieved Green’s function (blue) obtained by cross-correlating and stacking an increasing number of 65,536 sample segments of noise-encoded wavefields recorded at the receiver locations versus the reference waveform (red). As expected from Welch’s bound, the quality of the decoding improves as the square-root of the number of segments stacked (or the total length of the simulation).

Multiple Scattering: Friend or Foe?

Multiple scattering adds significant complexity to the Green’s functions (impulse responses). Therefore, if one considers every impulse response to be a realization from one or more stochastic information sources, one could say that the multiple scattering significantly increases the entropy of the information sources. Another way to say this is that the number of degrees of freedom in the source signals increases dramatically if we know that the signals do not only consist of, e.g., primary reflections. Fortunately, in the interferometric applications, we do not have to worry about this additional complexity when reconstructing the virtual source responses in ambient noise surface wave interferometry or when encoding Green’s functions between points in the interior, since interferometry intrinsically reconstructs the full Green’s function.

It is also interesting to consider the role of multiple scattering when it is present in the medium through which one wants to communicate, i.e. when the multiple scattering is part of the communication channel and not the source signals. Until the late 1990s, the reigning paradigm was that multiple scattering hinders communication and lowers the maximum rate of communication, rather as the noise term in Shannon’s equation lowers the information capacity. It turns out that the opposite is true. A first clue came in the classic paper by Derode and co-workers from 1995, who demonstrated that it is possible to time-reverse a high-order multiply-scattered wavefield through the actual scattering medium, to achieve super-resolution focusing at the original source location. The multiple scattering effectively enlarged the aperture of the linear array of transducers such that focusing well below the free-space diffraction limit was achieved. Around the same time, a researcher at Bell Labs named Gerry Foschini realized that the multitude of paths in a scattering medium actually help to increase the rate at which information can be transferred. Conceptually this can be thought of as sending different messages over the different multiple scattering paths (Figure 5) (Simon et al., 2001). What is more, the encoding and decoding algorithm that Foschini proposed, which realizes the higher rates of communication, does not require knowledge of the details of the scattering environment. When keeping the total transmitted power constant, but using MT transmitters and MR receivers, it turns out the channel capacity can be roughly MT as large.

Figure 5: Scattering can enhance communication. In the absence of the scatterer, a single linear combination of the blue and the red signals is measured. The scatterer causes a second, different linear combination of the blue and the red signals to be measured. With the antennas displaced slightly, it becomes possible to separate the signals using an inverting linear transformation and increase the transmission rate. Modified from Simon et al. (2001).

These developments come full-circle in a more recent contribution by Derode et al. (2003), which shows how to exploit multiple scattering when communicating with time-reversal antennas. Taking advantage of the super-resolution enabled by acoustic time-reversal, they can transmit random bit series to receivers that are only a few wavelengths apart. In contrast, in a homogeneous medium, the communication breaks down completely as individual bit series are no longer resolved (Figure 6). The transfer rate is directly proportional to the number of eigenvalues of the time-reversal operator at a given frequency and increases with increasing multiple scattering.

Figure 6: (Left): Experimental time-reversal setup (plan view) consisting of a forest of steel rods. Initially, a source is placed at the location of each receiver (at x=-30m) and the impulse response recorded on a 23-element array of transducers (at x=30m). (Right): The scattered signals are time-reversed and used to encode a bit stream. Through a multiple-scattering medium (top) five short pulses very well focused on each receiver can be observed. Through water (bottom), the pulses overlap, indicating a strong cross talk between the receivers. After Derode et al. (2003).

Thus, to answer the question posed above: if you are trying to recover the multiply-scattered wavefield between two arbitrary receivers, and you are measuring in the presence of a strong and somewhat uniform ambient noise field, chances are that nature has already encoded the desired wavefield for you and all you will have to do is measure the ambient noise field for long enough at the receivers, and decode it using interferometry (cross-correlation), implicitly making use of Welch’s bound. Similarly, if you want to communicate through a high order multiple scattering medium, the multiple scattering can actually help you achieve higher transfer rates. Thus, in both these cases, multiple scattering is more a friend than a foe.

In the second part of this article, we will consider the case where we are not interested in an interferometric construction of seismic data but rather want to decode the original sources, including their multiply-scattered waves, directly as we consider the marine simultaneous source separation problem. As we will see, geophysicists have a few more tricks they can bring to bear on the seismic encoding and decoding problem.

Also in this Series

Codes and Ciphers – PART II. Simultaneous Source Separation. Guest Contributors: Johan O. A. Robertsson and Dirk-Jan Van Manen (ETH Zurich, Institute of Geophysics). Editors: Lasse Amundsen and Martin Landrø.

References

Derode, Arnaud, Philippe Roux, and Mathias Fink. “Robust acoustic time reversal with high-order multiple scattering.” Physical review letters 75, no. 23 (1995): 4206.

Derode, Arnaud, Arnaud Tourin, Julien de Rosny, Mickaël Tanter, Sylvain Yon, and Mathias Fink. “Taking advantage of multiple scattering to communicate with time-reversal antennas.” Physical Review Letters 90, no. 1 (2003): 014301.

Lin, Fan-Chi, Michael H. Ritzwoller, and Roel Snieder. “Eikonal tomography: surface wave tomography by phase front tracking across a regional broad-band seismic array.” Geophysical Journal International 177, no. 3 (2009): 1091-1110.

Shannon, C. E., 1949, Communication in the presence of noise: Proc. Institute of Radio Engineers, 37(1), 10–21.

Simon, Steven H., Aris L. Moustakas, Marin Stoytchev, and Hugo Safar. “Communication in a disordered world.” Physics Today 54, no. 9 (2001): 38-43.

Welch, Lloyd R. “Lower bounds on the maximum cross correlation of signals (Corresp.).” Information Theory, IEEE Transactions on 20, no. 3 (1974): 397-399.