A total of 329 teams from around the world signed up for the competition, and 148 of them submitted at least one solution. In all, 2,200 solutions were scored against the blind test dataset of 10 wells. In the end, there can only be one winner.

Olawale Ibrahim, an applied geophysics student from the Federal University of Technology in Akure, Nigeria, won the competition. It should be stressed, however, that the final scores were incredibly close. Looking at the predictions on the well logs, one realises how little difference there is between the top 5-7 models.

What was it about?

As a recap, the ultimate aim of the competition was to predict lithology from digital well logs. To provide a consistent dataset against which the algorithms could be benchmarked, Explocrowd made a hand-crafted lithology interpretation using in-house data, completion logs, mud logs and, of course, the wireline curves. I2G.cloud supported the competition by providing the lithology for 14 wells.

A global community

Initiatives of this kind not only invite a global community to participate; they also produce open-source code that others can build on. Olawale adds: “Special thanks to the organizers and sponsors for the competition. The competition and data is a great step into ensuring more open source contributions in geoscience both from individuals and O&G companies especially. It was fun participating and I hope to get more opportunities to put the experience gained in solving similar challenges in the future.”

All data, the submitted machine learning code and the final scores are available online.

How good is the machine at predicting lithology and what can we learn from that?

It appears that the machine provides roughly an 80-95% solution in most cases (see examples below). “It can be questioned if a 100% solution can ever be achieved given the systemic uncertainty in assigning lithology labels in the first place. Real ground truth data is hard to come by, with core data being too biased towards sands and the cuttings data suffering from vertical resolution loss,” Peter Bormann, one of the organisers, writes in his blog about the competition.

Rotliegend limestone

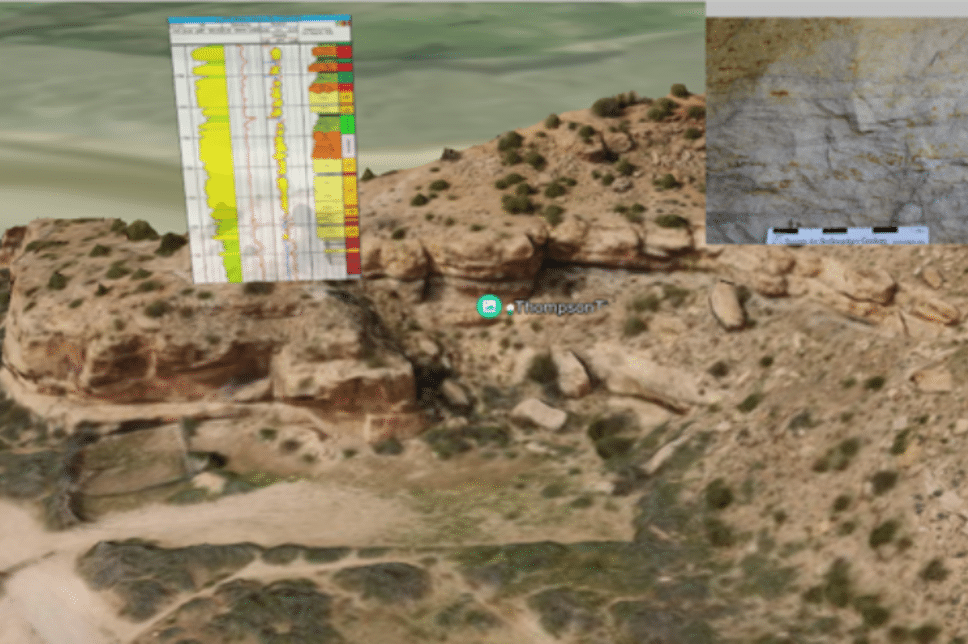

The Rotliegend interval in well 16/2-7 (Johan Sverdrup field) illustrates how useful ML can be, as Peter describes in his blog. Intriguingly, none of the models predicted the apparent limestone in the middle of a sandstone sequence (see figure; the hand-picked lithology is shown on the left).

A review of the mud logs revealed that the limestone label supplied by FORCE was entirely wrong: the FORCE interpreters had been misled by an incorrect lithology symbol on the mud log.

This interval was in fact cored, and the entire core consists of a lovely conglomerate and breccia.

This is just one example of all the machine learning models disagreeing with the label given by FORCE. In several of these cases of total disagreement, the label itself turned out to be disputable, and the recently acquired NOROG cuttings images helped to resolve the apparent interpretation conflict.

HENK KOMBRINK