Combining streamer and node technology to provide high-resolution seismic in congested areas with a fast turnaround.

This year, Polarcus was challenged by Dubai Petroleum to provide a high-resolution, fast-turnaround seismic image in a highly congested shallow water survey area. The targets were stratigraphic plays in the Mishrif and Thamama carbonate formations typical of the Arabian Gulf area. The objective was to image the area within a time-frame short enough to enable infill well placement.

This challenge could only be met through careful planning of the acquisition methods that underlie the processing, and through the processing and imaging of the data itself.

Polarcus Priority Processed PreSTM Seismic

A. Time slice Priority Processed PreSTM streamer data, showing blank due to obstruction. Green line shows location of seismic in image B.

B. Crossline of preliminary merged Hybrid Streamer/OBN, filling the blank.

C. The obstruction.

© Polarcus / Dubai Petroleum.

This seismic foldout was taken from GEO ExPro Vol. 16, Issue No. 6, where it appears on pages 64-68.

Hybrid Thinking: Providing Fast, High-Resolution Seismic in a Congested Area

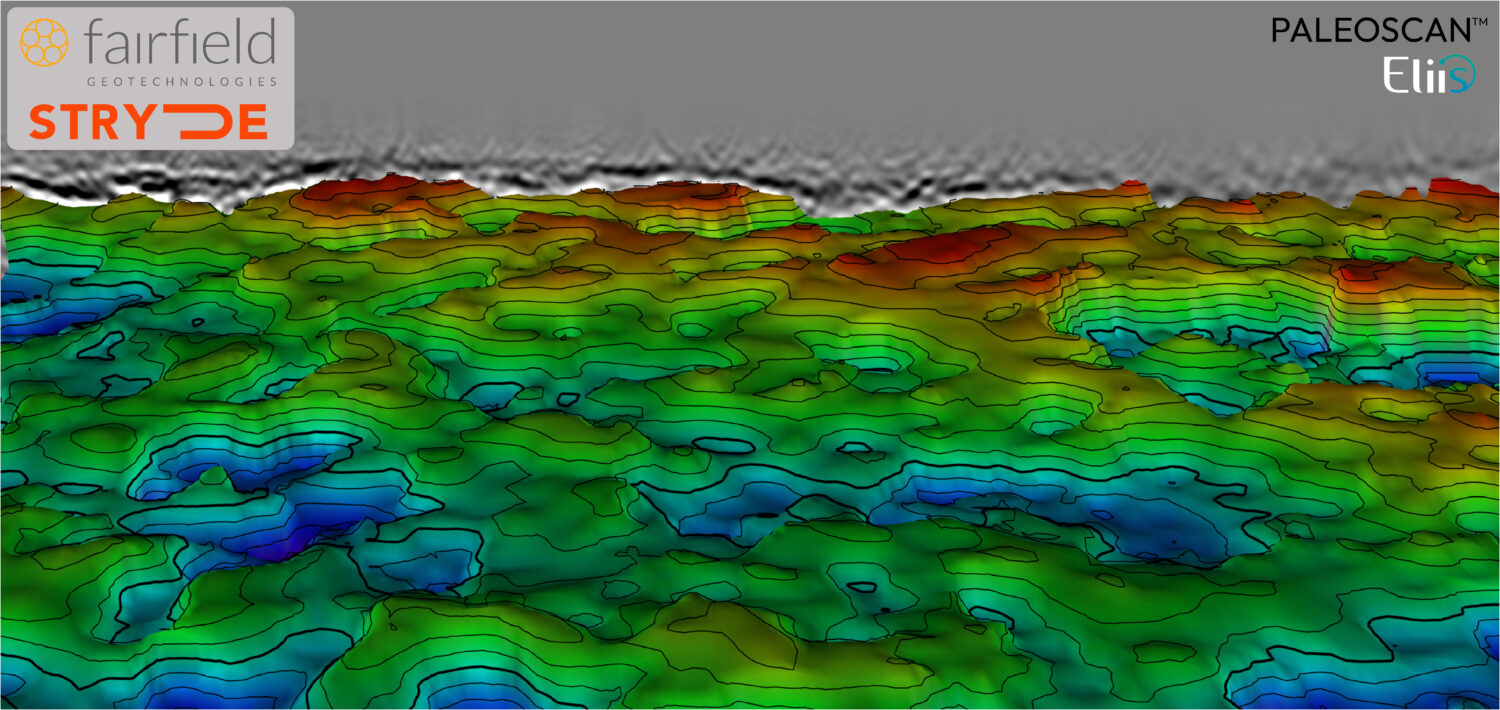

The acquisition technique chosen to provide a high-resolution, fast-turnaround seismic image in a highly congested shallow water area was a hybrid survey, using an XArray™ Penta Source streamer design with ocean-bottom nodes (OBN) around the obstructions to fill in the holes. This hybrid approach would give the best of both the streamer and OBN worlds: fast, reliable data from the streamer, and flexible receiver placement around the obstructions from the OBN.

A) Field fold coverage map of streamer acquisition. B) Field fold coverage map of hybrid acquisition. © Polarcus / Dubai Petroleum.

Given the symmetrical bin sizes that Penta Source gives, economies in cost and duration were achieved by shooting orthogonal lines that would ‘box-in’ the obstructed areas, minimising the node effort. The nodes were used to replicate the inline streamer geometry, not to produce a classic orthogonal-geometry survey, which further reduced the cost and duration of the project.

Make it Good

High-resolution meant a target frequency range of 2–100 Hz, which would allow the interpretation of the pinch-outs and reef build-ups of the main structures. To achieve this, high-trace-density streamer data was combined with overlapping shots, providing a natural bin size of 6.25 x 6.25m with a fold of 84. This equated to over 2 million traces per square kilometre, ensuring that the required signal-to-noise ratio (SNR) could be achieved with the appropriate processing.
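The trace density quoted above follows directly from the bin size and the fold. A minimal sketch of that arithmetic, where the function name is purely illustrative and the only inputs are the figures given in the text:

```python
def traces_per_km2(bin_x_m: float, bin_y_m: float, fold: int) -> float:
    """Traces per square kilometre for a given bin size and nominal fold."""
    # Number of bins that tile one square kilometre (1000 m x 1000 m).
    bins_per_km2 = (1000.0 / bin_x_m) * (1000.0 / bin_y_m)
    # Each bin holds `fold` traces.
    return bins_per_km2 * fold


# Figures from the article: 6.25 x 6.25 m bins, fold of 84.
density = traces_per_km2(6.25, 6.25, 84)
print(f"{density:,.0f} traces per square kilometre")
```

With these inputs there are 25,600 bins per square kilometre, giving 2,150,400 traces, consistent with the "over 2 million" stated above.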

Make it Fast

The processing turnaround time required was ten weeks after the last shot. Typically, this would mean an onboard fast-track but, in this case, that approach would have failed for several reasons. The high trace density would force onboard processing to rely on trace decimation so that the data volumes matched the onboard computing power, which would negate the advantages of the high trace density, while a conventionally processed volume produced onshore would arrive too late to meet the drilling timetable. The high trace density is achieved with five sources, which only works if the shot interval is shorter than in a traditional survey: four seconds (8.33m) rather than nine seconds (18.75m). As a result, the shots overlap each other, and they must be separated by deblending before processing can proceed. Deblending also requires significant compute resources and would not be possible with an onboard computer system. The deblending process has the benefit of removing not only the interfering energy from the next shot but also the ‘noise’ of the previous shot (mostly multiples at this point), which would otherwise add to an already heavily multiple-contaminated dataset.
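The two shot intervals quoted above imply the same vessel speed; what changes is how soon the next source fires. The sketch below checks that and counts how many later shots land inside one record. The 6-second record length is an assumption made only for illustration, as the article does not state the record length, and the function names are hypothetical.

```python
import math

KNOT_MS = 0.514444  # metres per second in one knot


def vessel_speed_ms(shot_interval_m: float, shot_interval_s: float) -> float:
    """Vessel speed implied by a shot interval given in distance and time."""
    return shot_interval_m / shot_interval_s


def overlapping_shots(record_length_s: float, shot_interval_s: float) -> int:
    """Number of later shots fired before the current record has finished."""
    return max(0, math.ceil(record_length_s / shot_interval_s) - 1)


# Shot intervals from the article.
penta_speed = vessel_speed_ms(8.33, 4.0)
conventional_speed = vessel_speed_ms(18.75, 9.0)
print(f"Penta Source:  {penta_speed:.2f} m/s ({penta_speed / KNOT_MS:.1f} knots)")
print(f"Conventional:  {conventional_speed:.2f} m/s ({conventional_speed / KNOT_MS:.1f} knots)")

# ASSUMPTION: a 6 s record length, chosen only to illustrate the overlap.
record_length_s = 6.0
print(overlapping_shots(record_length_s, 4.0))  # shots overlapping at a 4 s interval
print(overlapping_shots(record_length_s, 9.0))  # shots overlapping at a 9 s interval
```

Under that assumed record length, each record at the four-second interval contains energy from one following shot, which is what deblending has to separate, while the nine-second interval leaves the record clean.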

A) Crossline of Priority Processed streamer data, with blank due to obstruction. B) Crossline of preliminary merged hybrid streamer/OBN filling the blank. © Polarcus / Dubai Petroleum.

The challenge in these very shallow waters (less than 20m deep) is the surface multiples and the strong interbed multiples from the carbonates, which dominate and obscure the underlying structures, not to mention the subtle stratigraphic plays. The best way to process data in these circumstances is to interact with the interpretation team, who can use their experience and well-ties to guide the processing and, crucially, the velocity picking. Collaboration in this regard is challenging, as the opportunity to live screen-share or meet face-to-face with a remote onboard team is limited.

To overcome the onboard compute limitations and to maximise collaboration, a cloud-based processing workflow was proposed. Data could either be transferred via satellite directly from the vessel or shipped to shore by boat on disk packs and then transferred to a dedicated processing cloud. Because the project was close to shore, the latter option was chosen as the most cost-effective solution. Equipped with significant compute power, the cloud solves the first problem, the onboard compute limitation.

Enabling onshore and offshore teams to access the data, the cloud also resolves collaboration obstacles. Data processors can work remotely from anywhere in the world, provided that they have an internet connection. For this particular project, Polarcus placed their processors in the company’s headquarters in Dubai, conveniently located close to the client’s offices. The proximity of the teams benefited not only the direction of the processing but also the asset team’s confidence in the final images. The live collaboration throughout the project assured the teams of the integrity of the data.

Because the processing is performed on remote computers, processors can easily be embedded in the client’s own offices for similar projects. Alternatively, the client’s own processing and interpretation experts can be granted access to the full fidelity data set via downloads or web browsers.

What About the Nodes?

The OBN part of the survey was acquired after the streamer acquisition was completed. Indeed, the node placement was decided based on the actual streamer coverage achieved, rather than pre-plot coverage around obstructions that can often be difficult to predict. These sections were not part of the primary area of interest, so processing of this data was not considered a priority. Instead, the streamer and node data would be merged during the traditional onshore processing.

The Nitty Gritty

This article has already touched on the processing aspect, but there are further benefits that the unrestricted compute power of cloud processing can bring to a project such as this.

Already mentioned were the deblending of the data and the demultiple sequence, which included SRME, shallow water demultiple, tau-p deconvolution and radon methods.

The denoise sequence could be made substantially more extensive than normal, addressing a number of specific noise types from environmental to strum noise and specifically streamer turn noise, as the vessel navigated past surface obstructions. This ensured that the signal was optimal for the deghosting stage, where a good SNR ensures that the highest bandwidth is recovered. Like any good processing step, deghosting reduces the complexity of the signal for both the primary reflections and their multiples. This makes the demultiple process easier, as the adaptive subtraction has not just a simpler wavelet to deal with but also a more stable one, with sea-surface and tow depth variations removed.

Another crucial step was the ability to apply 4D regularisation. This creates traces at the exact centre of the CMP bin, a step in which some noise rejection can occur, but the main reason for doing this is to honour the requirement of migration that the spatial sampling is regular. The better this process is, the less migration noise is generated, again improving the SNR of the data.
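To make "the exact centre of the CMP bin" concrete, the sketch below locates the bin that a midpoint falls in and the centre that a regularised trace would be moved to. It covers only the two spatial dimensions of the bookkeeping and does not attempt the interpolation that regularisation itself performs. The grid origin and the function name are assumptions; only the 6.25m bin size comes from the article.

```python
import math


def bin_centre(x_m, y_m, bin_size_m=6.25, origin=(0.0, 0.0)):
    """Return the (ix, iy) bin index and the (x, y) centre of that bin.

    ASSUMPTION: a square grid aligned with the coordinate axes, with its
    corner at `origin`. A real survey grid is usually rotated.
    """
    ix = math.floor((x_m - origin[0]) / bin_size_m)
    iy = math.floor((y_m - origin[1]) / bin_size_m)
    cx = origin[0] + (ix + 0.5) * bin_size_m
    cy = origin[1] + (iy + 0.5) * bin_size_m
    return (ix, iy), (cx, cy)


# A hypothetical midpoint recorded at (10.0 m, 3.0 m).
index, centre = bin_centre(10.0, 3.0)
shift = (centre[0] - 10.0, centre[1] - 3.0)
print(index, centre, shift)
```

The `shift` is the distance the recorded midpoint sits from its bin centre; regularisation interpolates a new trace at the centre so that the migration sees evenly spaced input.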

The migration itself could be run without trace or temporal decimation, and took into account vertical transverse anisotropy (VTI) with a picked anisotropic ‘eta’ field.
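The article does not say which traveltime expression the migration used. As an illustration of what an ‘eta’ field controls, the sketch below uses the widely quoted nonhyperbolic moveout approximation for VTI media (Alkhalifah and Tsvankin), in which eta modifies the far-offset traveltime. The velocity, time and eta values are hypothetical and are not taken from the survey.

```python
import math


def vti_traveltime(offset_m, t0_s, v_nmo_ms, eta):
    """Two-way traveltime from the nonhyperbolic VTI moveout approximation:

    t^2 = t0^2 + x^2/V^2 - 2*eta*x^4 / (V^2 * (t0^2*V^2 + (1 + 2*eta)*x^2))
    """
    x2 = offset_m ** 2
    v2 = v_nmo_ms ** 2
    hyperbolic = t0_s ** 2 + x2 / v2
    correction = 2.0 * eta * x2 ** 2 / (v2 * (t0_s ** 2 * v2 + (1.0 + 2.0 * eta) * x2))
    return math.sqrt(hyperbolic - correction)


# ASSUMPTION: illustrative values only (t0 = 1 s, Vnmo = 2500 m/s, eta = 0.1).
for offset in (0.0, 1500.0, 3000.0):
    isotropic = vti_traveltime(offset, 1.0, 2500.0, 0.0)
    anisotropic = vti_traveltime(offset, 1.0, 2500.0, 0.1)
    print(f"offset {offset:6.0f} m: eta=0 -> {isotropic:.4f} s, eta=0.1 -> {anisotropic:.4f} s")
```

With eta set to zero the expression reduces to ordinary hyperbolic moveout. A positive eta shortens the traveltime at far offsets, which is why the field has to be picked rather than assumed if far-offset energy is to be focused correctly.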

Did it Work?

So, was it simply good planning and the power of the cloud? No, the good result came from the people involved – the crew onboard the vessel, the processing team and the client, who remain core to any project, regardless of its nature.

Initial indications are that the project was a success and that the objectives were met. This was achieved by taking the onboard fast-track concept and redefining it for a new age. We call it Priority Processing.
