‘f u cn rd ths, u cn gt a gd jb n cmptr prgrmmng.’ Anonymous

Supercomputers help geophysicists analyse vast amounts of seismic data faster and more accurately in their search for oil and gas. Seismic surveying is a merger of big data and big computing, and the seismic industry is expected to be an early adopter of new and fast computing technologies. Majors like Eni, Total and BP have rolled out mega-supercomputing centres for seismic imaging. In March 2015, PGS installed a five-petaflop supercomputer, the most powerful in the seismic industry. By 2020, exaflop computers performing a quintillion (10¹⁸) calculations per second are expected to arrive.

Cutting-edge research and development (R&D) is a competitive differentiator for many organisations, allowing them to attract leading talent and solve some of the world’s largest challenges. Supercomputing is revolutionising the types of problems we are able to solve in all branches of the physical sciences. Almost every university and major E&P company hosts some kind of supercomputing architecture. The primary application of supercomputing in E&P is seismic imaging, while secondary applications are reservoir simulation and basin simulation. The availability of computing resources is only going to increase in the future, and as a result it is important to know the primary concepts behind supercomputing.

What is a Supercomputer?

A common definition of a supercomputer is a computer that leads the world in terms of processing capacity – speed of calculation – at the time of its introduction. It is an extremely fast computer whose number-crunching power is currently measured in petaflops: quadrillions of floating point operations per second. Today’s supercomputer is destined to become tomorrow’s ‘regular’ computer. For many, a better definition of a supercomputer may be any computer that is only one generation behind what you really need!

Supercomputers are made up of many smaller computers – sometimes thousands of them – connected via fast local network connections. Those smaller computers work as an ‘army of ants’ to carry out difficult scientific or engineering calculations very fast. Supercomputers are built for very specific purposes. To fully exploit their computational capabilities, computer scientists may spend months, if not years, writing or rewriting software so that it runs efficiently on the machine.

IBM’s Watson computer system competed against Jeopardy’s two most successful and celebrated contestants – Ken Jennings and Brad Rutter – in 2011. What made it possible for Watson to win was not just its processing power, but its ability to learn from natural language. (Source: © IBM)

Supercomputers are expensive, with the top 100 or so machines in the world costing upwards of US$20 million each.

The terms supercomputing and high-performance computing (HPC) are sometimes used interchangeably. HPC is the use of supercomputers and parallel processing techniques for solving complex computational problems.

As of June 2015, the #1 supercomputer is Tianhe-2. The first scientific supercomputer was ENIAC (Electronic Numerical Integrator and Computer), constructed at the University of Pennsylvania in 1945. It was about 25 m long and weighed 30 tons. Two famous later supercomputers are IBM’s Deep Blue machine from 1997, which was built specifically to play chess (against Russian grandmaster Garry Kasparov), and IBM’s Watson machine (named for IBM’s founder, Thomas Watson, and his son), engineered to play the game Jeopardy.

Computer Speed

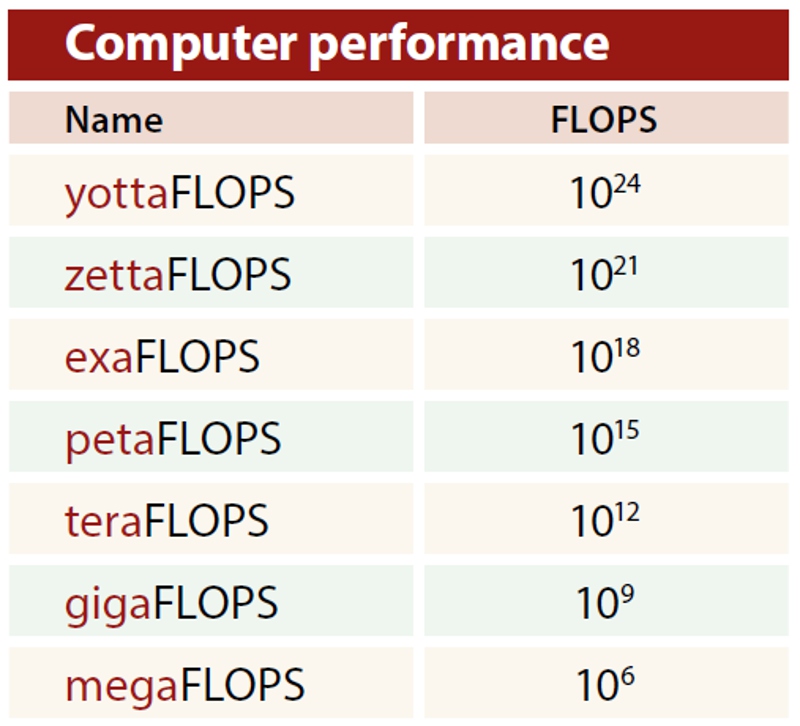

Terminology explained.

A supercomputer is defined by its processing speed. Computer speed is measured in floating point operations per second (FLOPS). Floating point is a way to represent real numbers, such as 3.14159 or 0.001, in a computer, as opposed to whole numbers (integers). A floating point operation is any arithmetic operation (addition, subtraction, multiplication, division, etc.) between floating point numbers.
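To make the terminology concrete, here is a small sketch (the helper names `dot_product_flops` and `format_flops` are illustrative, not from any library). It counts the floating point operations in a simple calculation, the dot product of two vectors, and expresses a speed in FLOPS with the SI prefixes used throughout this article. It assumes plain operation counting, with no special treatment of combined multiply-add instructions.

```python
# SI prefixes used for computer speed, from kiloflops up to exaflops.
PREFIXES = [
    (1e18, "exa"),
    (1e15, "peta"),
    (1e12, "tera"),
    (1e9, "giga"),
    (1e6, "mega"),
    (1e3, "kilo"),
]


def dot_product_flops(n: int) -> int:
    """Floating point operations in the dot product of two length-n vectors:
    n multiplications plus n - 1 additions."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return 2 * n - 1


def format_flops(rate: float) -> str:
    """Express a speed in FLOPS using the largest SI prefix that fits."""
    for scale, prefix in PREFIXES:
        if rate >= scale:
            return f"{rate / scale:.1f} {prefix}flops"
    return f"{rate:.1f} flops"


print(dot_product_flops(1000))  # 1999
print(format_flops(5.4e15))     # 5.4 petaflops
print(format_flops(1e18))       # 1.0 exaflops
```

A machine running at one petaflop could, in principle, complete about half a trillion such 1,000-element dot products every second.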

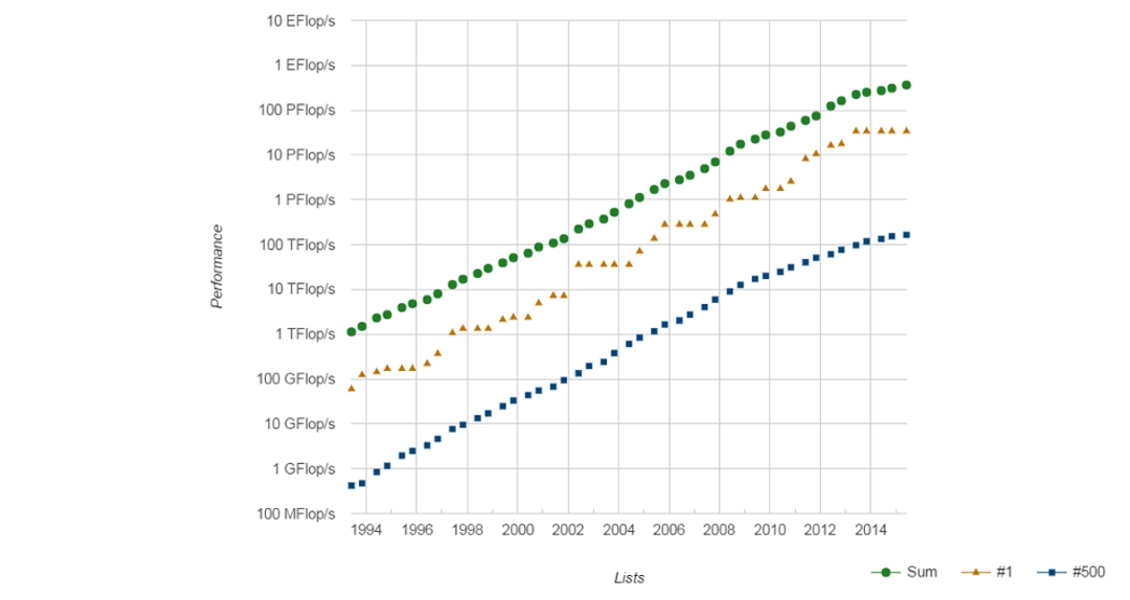

To see the current computing leaders, check out the website http://www.top500.org, which ranks computers by their performance. As of June 2015, it lists PGS’ supercomputer Abel, named after the famous Norwegian mathematician Niels Henrik Abel (1802–1829), at #12 with a peak performance of 5.4 petaflops and 145,920 cores – more than five quadrillion (five thousand trillion) calculations per second! Eni E&P’s HPC2 supercomputer is #17 with a peak performance of 4.6 petaflops and 72,000 cores. Total E&P’s machine Pangea is ranked #29 with a peak performance of 2.3 petaflops, and boasts 110,000 processor cores, 442 terabytes of memory and 7 petabytes of disk storage.
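Dividing peak performance by core count gives a rough feel for how much each core contributes. The sketch below uses only the June 2015 figures quoted above; the helper name `gflops_per_core` is illustrative. It assumes the core counts are comparable, which they may not be: systems count cores differently (for example, whether accelerator cores are included), so treat the result as a rough average rather than a like-for-like comparison.

```python
# Peak performance (petaflops) and core counts as quoted from the June 2015 TOP500 list.
SYSTEMS = {
    "PGS Abel": (5.4, 145_920),
    "Eni HPC2": (4.6, 72_000),
    "Total Pangea": (2.3, 110_000),
}


def gflops_per_core(peak_petaflops: float, cores: int) -> float:
    """Average peak speed per core in gigaflops (1 petaflop = 1e6 gigaflops)."""
    return peak_petaflops * 1e6 / cores


for name, (peak, cores) in SYSTEMS.items():
    print(f"{name}: {gflops_per_core(peak, cores):.1f} gigaflops per core")
# PGS Abel: 37.0 gigaflops per core
# Eni HPC2: 63.9 gigaflops per core
# Total Pangea: 20.9 gigaflops per core
```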

Entry to the TOP500 list is voluntary, and some companies intentionally do not participate to keep information about their computing capacity hidden from competitors. As a result, it is difficult to know which really are the biggest supercomputers in the E&P industry. BP is known to be at the forefront of supercomputer technology, with a total processing power of 2.2 petaflops, 1,000 terabytes of memory and 23.5 petabytes of disk space. ExxonMobil has also touted its supercomputer in an in-house magazine, saying that its calculating abilities are in the ‘next-generation’ quadrillions per second.

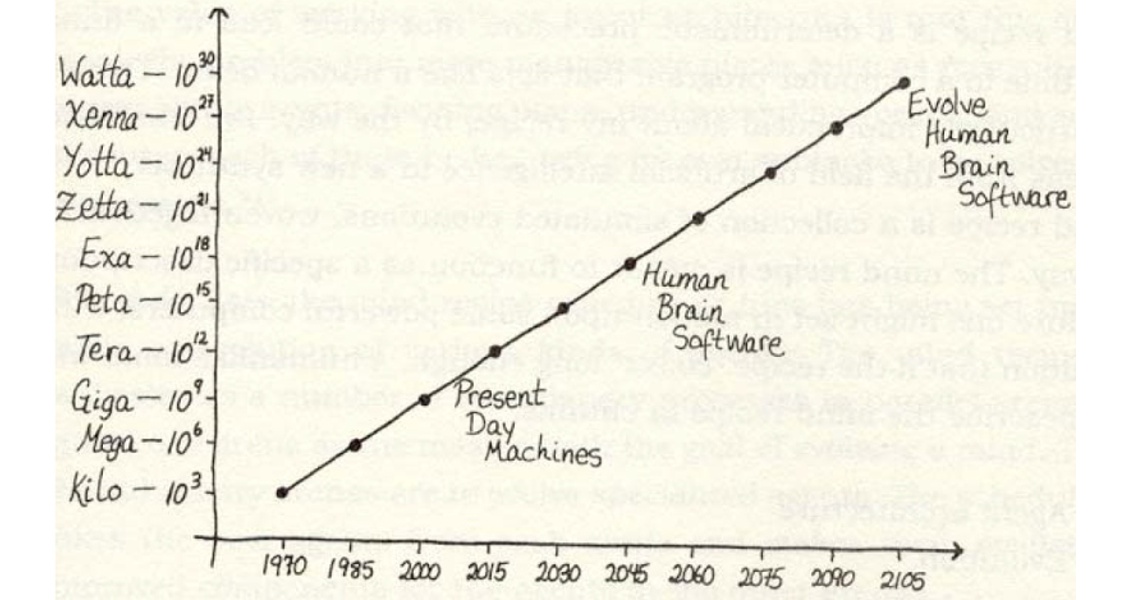

On a logarithmic scale, the graph shows a roughly linear progression in computing speed, which implies exponential growth. This is a basic outcome of Moore’s Law. In 1965, the engineer Gordon Moore noticed that the number of transistors per computer chip was doubling roughly every year; in 1975 he revised his estimate to approximately every two years. The trend extended to the clock speed of our processors as well. Computers of the early 1970s ran in the kilohertz range, machines of the mid-1980s in the megahertz range, and computers of the early 2000s in the gigahertz range: roughly a thousand-fold speed-up every 15 years. Since the mid-2000s, however, clock speeds have levelled off at a few gigahertz, and further gains have come mainly from putting more processor cores to work in parallel.
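Moore’s Law is usually quoted as a doubling about every two years, and steady doubling compounds quickly. A minimal sketch of the arithmetic, assuming a constant doubling period (real growth rates have varied over time); the function names are illustrative:

```python
import math


def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Total growth after `years` if capacity doubles every `doubling_period` years."""
    return 2.0 ** (years / doubling_period)


def doubling_time(factor: float, years: float) -> float:
    """Doubling period implied by growing by `factor` over `years`."""
    if factor <= 1:
        raise ValueError("factor must exceed 1")
    return years * math.log(2) / math.log(factor)


print(f"{growth_factor(10):,.0f}x in 10 years")            # 32x in 10 years
print(f"{growth_factor(40):,.0f}x in 40 years")            # 1,048,576x in 40 years
print(f"{doubling_time(1000, 15):.2f} years per doubling")  # 1.51 years per doubling
```

A two-year doubling gives about a million-fold increase over 40 years, and a thousand-fold gain in 15 years corresponds to doubling roughly every year and a half.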

In his 2005 book The Lifebox, the Seashell, and the Soul: What Gnarly Computation Taught Me About Ultimate Reality, the Meaning of Life, and How to be Happy (Thunder’s Mouth Press), Rudy Rucker (1946–), an American mathematician, computer scientist, science fiction author and philosopher, made an interesting drawing of what may happen if this trend continues.

Energy Usage and Heat Management

The biggest supercomputers consume enormous amounts of electrical power and produce so much heat that cooling facilities must be constructed to ensure proper operation. Tianhe-2, for example, consumes 24 MW of electricity. Global power consumption is approximately 16 terawatts, which means that Tianhe-2 alone accounts for about 0.00015% of the world’s power consumption. The cost to power and cool the system is significant: 24 MW at $0.10/kWh is $2,400 an hour, or about $21 million per year. The Green500 list (www.green500.org) ranks the most energy-efficient supercomputers in the world.
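The Tianhe-2 figures can be checked with a few lines of arithmetic. This sketch assumes a constant 24 MW draw around the clock, a flat price of $0.10/kWh and the 16 TW world figure quoted above; the function names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years


def share_of_world_power(system_mw: float, world_tw: float = 16.0) -> float:
    """Percentage of global power consumption drawn by one system."""
    return system_mw * 1e6 / (world_tw * 1e12) * 100


def electricity_cost(power_mw: float, usd_per_kwh: float, hours: float) -> float:
    """Cost in US dollars of drawing power_mw continuously for the given hours."""
    kilowatts = power_mw * 1000
    return kilowatts * usd_per_kwh * hours


tianhe2_mw = 24.0
print(f"Share of world power: {share_of_world_power(tianhe2_mw):.5f}%")  # 0.00015%
print(f"Per hour: ${electricity_cost(tianhe2_mw, 0.10, 1):,.0f}")         # $2,400
print(f"Per year: ${electricity_cost(tianhe2_mw, 0.10, HOURS_PER_YEAR):,.0f}")  # $21,024,000
```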

On the June 2015 Green500 list, the Shoubu supercomputer from RIKEN (Institute of Physical and Chemical Research in Japan) earned the top spot as the most energy-efficient (or greenest) supercomputer in the world, surpassing seven gigaflops/watt (seven billion floating point operations per second per watt). Assuming that Shoubu’s energy efficiency could be scaled linearly to an exaflop supercomputing system, one that can perform a quintillion (10¹⁸) floating point operations per second, such a system would consume roughly 140 MW. Shoubu is ranked #160 on the June 2015 edition of the TOP500 list.
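The exaflop power estimate is a single division: target speed divided by efficiency. A minimal sketch, assuming (as the linear-scaling argument does) that efficiency stays constant as the system grows; `power_at_scale` is an illustrative name. At exactly seven gigaflops/watt the result is about 143 MW, and a slightly higher efficiency gives a slightly lower figure.

```python
def power_at_scale(target_flops: float, flops_per_watt: float) -> float:
    """Power in watts needed to reach target_flops at a fixed efficiency,
    assuming efficiency stays constant (scales linearly) with system size."""
    if flops_per_watt <= 0:
        raise ValueError("efficiency must be positive")
    return target_flops / flops_per_watt


EXAFLOP = 1e18            # a quintillion floating point operations per second
SHOUBU_EFFICIENCY = 7e9   # about seven gigaflops per watt (June 2015 Green500)

megawatts = power_at_scale(EXAFLOP, SHOUBU_EFFICIENCY) / 1e6
print(f"{megawatts:.0f} MW")  # 143 MW
```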

Supercomputers are either air-cooled (with fans) or liquid-cooled (with a coolant circulated in a similar way to refrigeration). Either way, cooling translates into very high energy use and expensive electricity bills. The water cooling system for Tianhe-2 draws 6 MW of power.

Parallel Processing

An ordinary computer is a general-purpose machine that inputs data, stores and processes it, and then generates output. A supercomputer works in an entirely different way, typically by using parallel processing instead of the serial processing that an ordinary computer uses. Instead of doing one thing at a time, it does many things at once. Most supercomputers are multiple computers that perform parallel processing, where the problem is divided up among a number of processors. Since the processors are working in parallel, the problem is usually solved more quickly even if the processors work at the same speed as the one in a serial system.
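The divide-and-combine idea behind parallel processing can be sketched in a few lines: split the problem into chunks, let several workers compute partial results at the same time, then combine them. This is an illustration under stated assumptions, not how a supercomputer is actually programmed. It uses Python threads from the standard `concurrent.futures` module, and in CPython threads do not run pure-Python arithmetic simultaneously, so it shows the structure rather than a real speed-up. Supercomputer codes typically run separate processes across many nodes, commonly coordinated with MPI. The helper names `split` and `parallel_sum` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n_parts):
    """Divide data into n_parts contiguous chunks of near-equal size."""
    if n_parts < 1:
        raise ValueError("n_parts must be at least 1")
    size, extra = divmod(len(data), n_parts)
    chunks, start = [], 0
    for i in range(n_parts):
        # The first `extra` chunks each take one leftover item.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def parallel_sum(data, n_workers=4):
    """Give each worker one chunk to sum, then combine the partial sums."""
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(sum, chunks))
    return sum(partial_sums)


print(split(list(range(10)), 3))          # [[0, 1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(parallel_sum(list(range(1000)), 4))  # 499500
```

The same pattern underlies seismic imaging on a supercomputer: the data are divided among thousands of processors, each works on its share, and the partial results are combined at the end.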

In the next article on supercomputing, we will elaborate on parallel processing, CPUs (central processing units) and GPUs (graphics processing units). GPU development was originally driven by the demand for ever more realistic video games. GPUs have since advanced significantly as engines for mathematical computation, meeting the needs of physical simulations. If you think of your computer as an army, CPUs would be the generals – highly capable and extremely efficient at command and control. GPUs would be the foot soldiers – massive numbers of production units, but not as capable at decision-making.