Improving the analysis of risk in field management is the Holy Grail of reservoir modelling, and in recent years history matching has become a vital tool in this process. As explained by Jon Saetrom, Chief Science Officer of Resoptima, an independent technology company delivering software solutions and consultancy services to the oil and gas industry, the main purpose of history matching is “to generate geological and reservoir simulation models which honour both static and dynamic data measurements and can provide reliable predictions regarding the future state of the reservoir.” The underlying assumption is that if a reservoir model responds in the same way as the actual wells under historical constraints, it will also behave like them under future constraints. Conversely, if it cannot reproduce past observations, it is very unlikely to predict future production accurately. “Using methods and tools which can help improve the model predictability is therefore key to better reservoir management,” Jon concludes.
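The article does not state which objective function is used. As an assumption, history matching is commonly posed in a Bayesian least-squares form, where a prior term keeps the model close to the geological (static) description and a data term measures the mismatch with dynamic observations:

$$
O(\mathbf{m}) = \tfrac{1}{2}\left(\mathbf{m} - \mathbf{m}_{\mathrm{pr}}\right)^{\top} \mathbf{C}_M^{-1} \left(\mathbf{m} - \mathbf{m}_{\mathrm{pr}}\right) + \tfrac{1}{2}\left(g(\mathbf{m}) - \mathbf{d}_{\mathrm{obs}}\right)^{\top} \mathbf{C}_D^{-1} \left(g(\mathbf{m}) - \mathbf{d}_{\mathrm{obs}}\right)
$$

Here $\mathbf{m}$ holds the uncertain model parameters (e.g. porosity and permeability), $\mathbf{m}_{\mathrm{pr}}$ and $\mathbf{C}_M$ are their prior mean and covariance from the geo model, $g(\mathbf{m})$ is the production data predicted by the flow simulator, $\mathbf{d}_{\mathrm{obs}}$ is the observed data and $\mathbf{C}_D$ is the measurement-error covariance. Dropping the first term reduces the problem to pure curve fitting, which is how a match can be obtained at the expense of geological plausibility.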

Building Models – and Uncertainties

The process of data accumulation begins as soon as a prospect is identified: magnetic and gravimetric data plus seismic analysis feed into a basic model of the geological history, with some regional estimates of the reservoir’s potential quality. More data arrive at the appraisal stage, when the first well is drilled and the development geologists and petrophysicists put together a static model. Because the data are so varied, every stage carries its own uncertainty, so subsurface teams generate multiple equally plausible static geo models in an attempt to estimate that uncertainty.

Uncertainty in reservoir modelling: the base case model. Each branch of the tree represents different modelling choices, e.g. choice of seismic processing vendor, different geological concepts, well-log interpretation, choice of lithofacies modelling technique, etc. Each leaf represents an individual reservoir model, and all of them honour the same data measurements. Hence, making decisions based on one single base case model is a big leap of faith.

At this stage, for practical reasons, the geologist traditionally provides a single geo model, from which the engineers build a dynamic model ready for simulation and history matching. This first step discards a great deal of potential subsurface information, and with it an appreciation of the subsurface uncertainties and how they relate to one another.
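The multiplicative growth of the uncertainty tree can be illustrated with a short Python sketch. The branch names and options below are hypothetical examples of the modelling choices listed in the caption; each combination of options corresponds to one leaf, i.e. one candidate reservoir model.

```python
from itertools import product

# Hypothetical modelling choices at each branch of the uncertainty tree.
MODELLING_CHOICES = {
    "seismic_processing": ["vendor_A", "vendor_B"],
    "geological_concept": ["concept_1", "concept_2", "concept_3"],
    "log_interpretation": ["interpretation_1", "interpretation_2"],
    "facies_technique": ["object_based", "pixel_based"],
}


def enumerate_leaves(choices):
    """Yield every leaf of the tree as a dict of branch name -> selected option."""
    names = list(choices)
    for combo in product(*(choices[name] for name in names)):
        yield dict(zip(names, combo))


leaves = list(enumerate_leaves(MODELLING_CHOICES))
print(len(leaves))  # 2 * 3 * 2 * 2 = 24 leaves
print(leaves[0])    # the first leaf: first option at every branch
```

Even these four small branches give 24 distinct models; selecting a single base case keeps only one of them.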


The engineers then assess the uncertainty ranges in this single model and eliminate those perceived to have the least influence, because reducing the number of uncertainties cuts the computing power and time needed to achieve a history match during simulation. Finally, the history match is often achieved by ‘altering’ the model to fit the data, much like the traditional curve fitting approach, which can leave a model that is geologically implausible. Anchoring to a single base case model, eliminating uncertainties established during modelling to save computational time, and prioritising the history match over the underlying geology together leave the model poorly equipped to predict the field’s future development.

The single history-matched model is then used to simulate the field’s response to production, taking into account, for example, pressure changes, fluid dynamics and other active well data. Once actual production behaviour is well matched by the dynamic model, the model is run forward in time to project production and pressures. It helps to identify where oil has been missed and infill drilling is needed, whether investment in new drilling is required, or whether field depletion and water cut make further investment unviable.

If the predictions made by this single reservoir model are unreliable, the consequences for an oil company can be dramatic, since drilling a single well can cost $100 million or more.

The Range of Uncertainty

The ideal solution would therefore be a system that allows many more models to be history matched, giving a broader and more accurate picture of the uncertainty space.

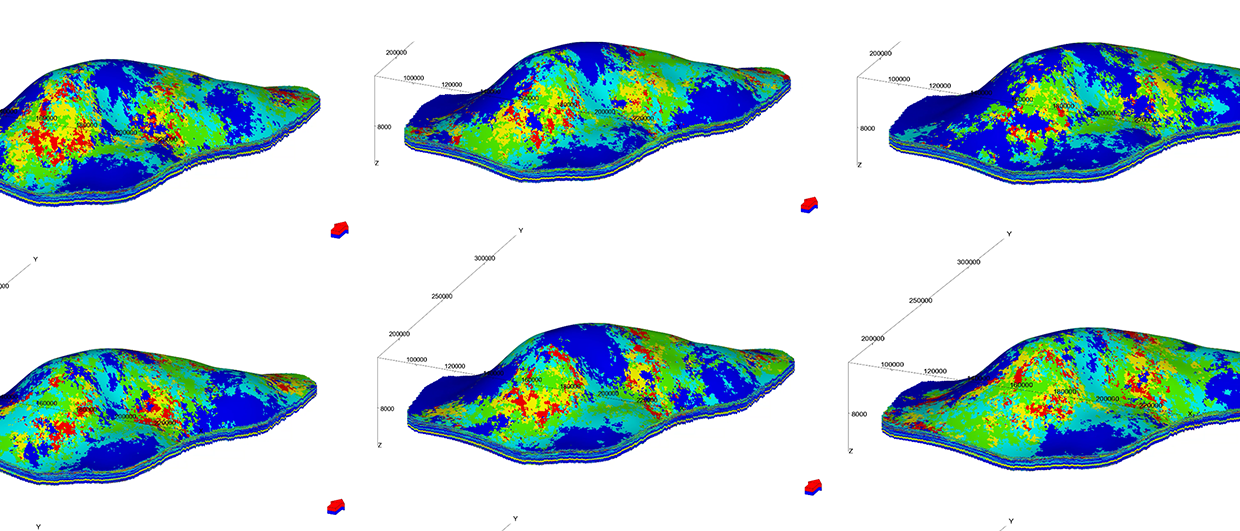

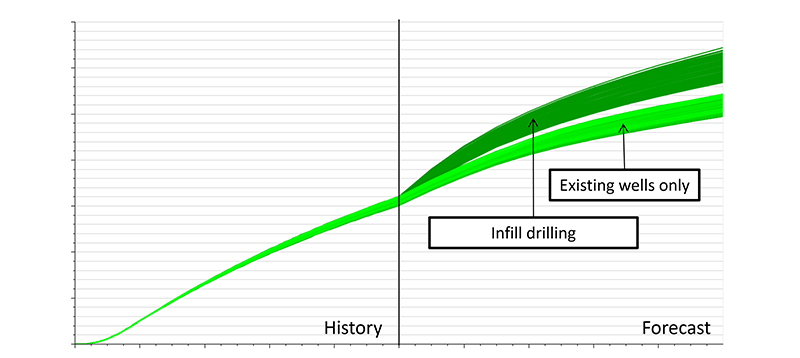

Reservoir management under uncertainty. Multiple reservoir models are generated, all honouring both static and dynamic data in the historical period. Even though every model honours the same data measurements, there is still significant uncertainty in the production forecast. With multiple models, however, it is straightforward to quantify the prediction uncertainty and assess the risk of various investment decisions (in this case, infill drilling).

The E&P industry has been working on this since 2001, adopting the Ensemble Kalman Filter (EnKF), a Monte Carlo extension of the classical Kalman filter that is widely used in other fields such as weather forecasting and the car industry. In the ensemble-based approach, the full range of geo models is carried forward, capturing key uncertainties in connectivity and reservoir quality where previously there was just one model. This creates a natural link between geologists and engineers and can greatly improve how teams collaborate on building the range of models and populating their static and dynamic properties. Each model realisation is geologically sound and represents one possible outcome of the various modelling choices: one leaf node in the uncertainty tree. Hundreds of uncertainties are captured and carried forward to the history matching stage. The result is a set of reservoir models that are geologically consistent and honour the dynamic production data, which ultimately improves predictability.
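The core of the EnKF is an analysis step that updates every ensemble member using covariances estimated from the ensemble itself. The sketch below is a textbook stochastic EnKF update with perturbed observations, not ResX’s implementation, which the article does not describe. The linear “simulator” `G`, the parameter values and the ensemble size are hypothetical stand-ins for a flow simulator and real reservoir parameters.

```python
import numpy as np


def enkf_update(M, D, d_obs, C_D, rng):
    """Stochastic EnKF analysis step.

    M     : (n_params, N) ensemble of model parameters (one column per model)
    D     : (n_data, N) simulated data for each ensemble member
    d_obs : (n_data,) observed data
    C_D   : (n_data, n_data) observation-error covariance
    """
    N = M.shape[1]
    # Deviations of each member from the ensemble mean
    dM = M - M.mean(axis=1, keepdims=True)
    dD = D - D.mean(axis=1, keepdims=True)
    # Cross-covariance (parameters vs data) and data auto-covariance
    C_MD = dM @ dD.T / (N - 1)
    C_DD = dD @ dD.T / (N - 1)
    # Each member is matched to its own perturbed copy of the observations
    E = rng.multivariate_normal(np.zeros(d_obs.size), C_D, size=N).T
    D_obs = d_obs[:, None] + E
    # Kalman gain K = C_MD (C_DD + C_D)^-1 via a linear solve (matrix is symmetric)
    K = np.linalg.solve(C_DD + C_D, C_MD.T).T
    return M + K @ (D_obs - D)


rng = np.random.default_rng(0)
# Hypothetical linear "simulator": 5 observations of 3 parameters
G = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0],
              [0.0, 0.0, 1.0],
              [1.0, 1.0, 0.0],
              [0.0, 1.0, 1.0]])
m_true = np.array([1.0, -0.5, 2.0])
C_D = 0.01 * np.eye(5)
d_obs = G @ m_true + rng.multivariate_normal(np.zeros(5), C_D)

M_prior = rng.normal(0.0, 1.0, size=(3, 200))  # 200-member prior ensemble
M_post = enkf_update(M_prior, G @ M_prior, d_obs, C_D, rng)

print("prior mean:", M_prior.mean(axis=1), "spread:", M_prior.std(axis=1))
print("posterior mean:", M_post.mean(axis=1), "spread:", M_post.std(axis=1))
```

The updated ensemble clusters around `m_true` with a much smaller spread than the prior, yet every column is still a complete model that can be run forward to forecast production. Because the gain is built from ensemble statistics, the simulator is treated as a black box and no gradients are required, which is one reason the approach parallelises well across flow simulations.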


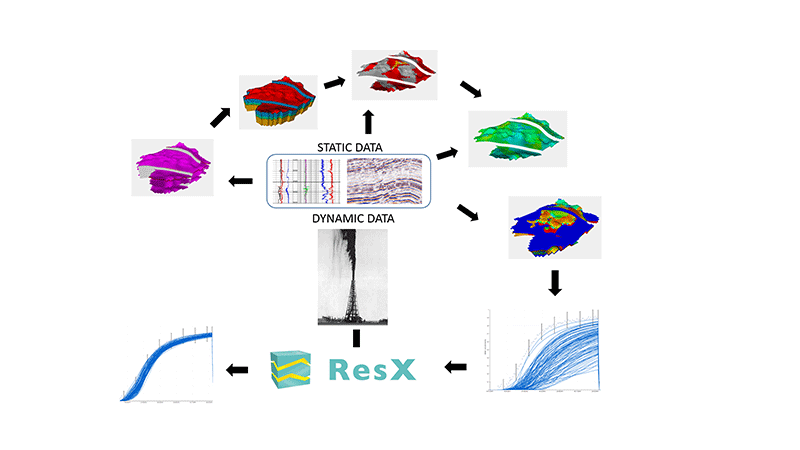

Since it was founded in 2010, Resoptima has been developing a suite of software aimed at improving reservoir management. In 2013 it launched ResX, the industry’s first commercial software platform for computer-assisted history matching and production forecasting using ensemble-based methods. ResX uses the latest developments in data-assimilation techniques to generate highly reliable reservoir models that honour both static and dynamic data. Because flow simulations run in parallel on high performance computing facilities, results are obtained efficiently. The solution enables data-driven decisions by exploiting the increasing volume and detail of data captured in the E&P industry, allowing companies to generate reliable production forecasts with a thorough quantification of uncertainty, and to focus on the decisions that maximise recovery while minimising financial risk.

“The workflow streamlines the process from geo modelling to flow simulation. This means that we can easily test different geological concepts or alternative modelling uncertainties and quickly see the response in production profiles,” Jon explains. “Using the ensemble methodology, we are able to capture the prior uncertainty in a model by sampling static uncertainties such as porosity, permeability and net-to-gross, with dynamic uncertainties derived through the integration of production data.”
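Sampling a prior ensemble of static uncertainties, as described in the quote, can be sketched in a few lines of Python. The distributions (uniform porosity and net-to-gross, log-normal permeability loosely tied to porosity) and the bulk rock volume are illustrative assumptions, not values from the article or from ResX. Summarising the ensemble with percentiles shows how many models translate directly into a quantified range.

```python
import numpy as np


def sample_prior_ensemble(n_members, rng):
    """Draw a hypothetical prior ensemble of static reservoir properties."""
    porosity = rng.uniform(0.15, 0.30, n_members)  # fraction
    # Assumed log-linear porosity-permeability trend with scatter (mD)
    log10_perm = 1.0 + 8.0 * porosity + rng.normal(0.0, 0.3, n_members)
    permeability = 10.0 ** log10_perm
    net_to_gross = rng.uniform(0.5, 0.9, n_members)  # fraction
    return {"porosity": porosity,
            "permeability": permeability,
            "net_to_gross": net_to_gross}


rng = np.random.default_rng(1)
ensemble = sample_prior_ensemble(500, rng)

# Net pore volume per member: bulk volume x net-to-gross x porosity
BULK_VOLUME_M3 = 5.0e8  # hypothetical gross rock volume
net_pore_volume = BULK_VOLUME_M3 * ensemble["net_to_gross"] * ensemble["porosity"]

# Industry P90/P50/P10 correspond to the 10th/50th/90th percentiles
p90, p50, p10 = np.percentile(net_pore_volume, [10, 50, 90])
print(f"P90 {p90:.3e}  P50 {p50:.3e}  P10 {p10:.3e} m3")
```

In a real workflow each member’s properties would populate a full 3D grid and be passed to the flow simulator; permeability is sampled here because it drives the dynamic response that history matching then conditions on production data.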

“The application of an ensemble based approach through ResX allows E&P companies to drastically improve the reliability of their reservoir models, quantify the uncertainty in future production forecasts, and use simulation of future production scenarios as key input to investment and operational decisions,” says Tore Felix Munck, Managing Director of Resoptima.

Geologically Sound Matches

In addition to providing high-quality, predictive history matches, the company believes that ensemble-based history matching greatly shortens the time required for the matching process. It also scales well with model size and complexity, making it well suited to super fields with many years of production history. By using computer resources and man-hours efficiently, ensemble techniques support robust end-to-end workflows that increase confidence in reservoir development planning and improve decision-making, potentially resulting in substantial cost savings for exploration and production companies.

ResX was commercially released in October 2013 and the technology has been well received by the industry. “Since its initial release, the technology has been used by several well established E&P companies. We clearly see a demand for tools which streamline the reservoir modelling and history matching process, and we are currently working closely together with the industry to further improve the technology,” Tore says.

Ensemble-based history matching using ResX. An automated workflow streamlines the process, moving from geological modelling (structural modelling, grid building, facies modelling, petrophysical modelling and water saturation modelling) and conditioning on static input data (such as seismic and well logs) to flow simulation and dynamic data conditioning using ResX.

Ensemble-based history matching in reservoir modelling has proved that it can provide high-quality, geologically sound and reliable history matches, with superior results compared with other methods, together with a thorough quantification of uncertainty. The method scales readily to large, complex models and yields reservoir models with a high degree of predictability, resulting in improved reservoir management.


Whether this means that for reservoir engineers the search for the Holy Grail is over remains to be seen. However, since this is a business where the wrong decision can easily mean a loss of $100 million or more, any effort which can improve the uncertainty quantification and risk management is likely to be welcomed throughout the industry.