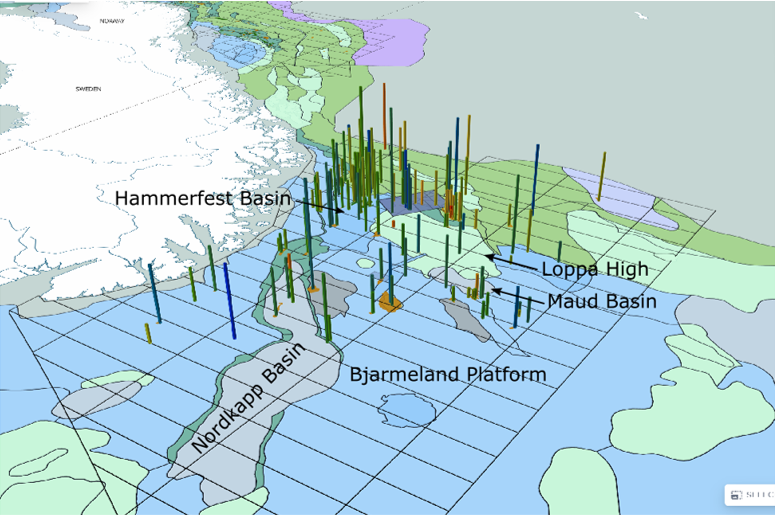

For sedimentologists, describing the grain size of core samples is key because it provides valuable information about the sedimentary environment, depositional processes, petrophysical properties, and the history of the area where the samples were collected.

However, sedimentological descriptions involve interpretation and can be influenced by subjective factors such as the observer’s expertise and perception. Observing individual grains on a cored surface is not always straightforward, let alone sampling an entire length in detail if you would just have a handless at your disposal. Equally, where a single observation of the “average” grain size per foot of core can be practice when it comes to manual core interpretation, it is the sorting and grain size distribution along the cored length of a reservoir that can reveal a lot more information about its flow characteristics

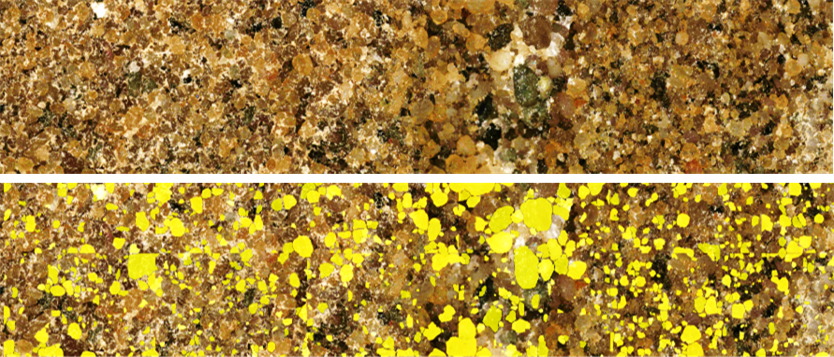

To make the process of grain size determination more objective, Epslog has developed a specific methodology where ultra-high resolution images are taken continuously along the core. Using these images, a deep machine learning algorithm was subsequently trained to perform a segmentation of each identifiable grain. A post-processing algorithm then sorts every detected grain into a predefined bin, such that a distribution grain size profile can be established for each cm of core.

The methodology could only be developed now because of the emergence of deep machine learning models and the ability to store large databases.

Sedimentologist can use calculated grain size to support their decision, work more rapidly and more efficiently. Such kind of approach opens the door to a more robust and efficient way of working and can be extended to other geological descriptions based on photographs.